* p values are highly unreliable - don't trust them, don't use them!

www.thenewstatistics.com

tiny.cc/osfsigroulette

#IRICSydney
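A minimal Python sketch of why a single p value says little: simulate many exact replications of the same two-group experiment and see how much p varies from one replication to the next. Several choices here are illustrative assumptions and are not taken from the linked material: the true effect (0.5 SD), the group size (32), the number of replications, and the use of a z-test with a known SD of 1 in place of a t-test.

```python
import random
from statistics import NormalDist, fmean


def simulate_p_values(effect=0.5, n_per_group=32, replications=25, seed=1):
    """Run the same two-group experiment `replications` times and return
    the two-sided p value from each run.

    Simplifying assumption: both groups have a known SD of 1, so a z-test
    is used instead of a t-test.
    """
    rng = random.Random(seed)       # fixed seed so the run is repeatable
    std_normal = NormalDist()       # standard normal, mean 0, SD 1
    p_values = []
    for _ in range(replications):
        control = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        treatment = [rng.gauss(effect, 1.0) for _ in range(n_per_group)]
        diff = fmean(treatment) - fmean(control)
        se = (2 / n_per_group) ** 0.5   # SE of a mean difference when SD = 1
        z = diff / se
        p_values.append(2 * (1 - std_normal.cdf(abs(z))))
    return p_values


ps = simulate_p_values()
print(f"smallest p = {min(ps):.4f}, largest p = {max(ps):.4f}")
print(f"share with p < .05: {sum(p < 0.05 for p in ps) / len(ps):.2f}")
```

Under these assumptions the expected z is 2, so power is only about 50%. Identical experiments therefore land on both sides of .05 about equally often.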

- FAIR Data Cheatsheet @w-u-r.bsky.social

- Open Research: Examples of Good Practice, and Resources Across Disciplines @ukrepro.bsky.social

- 3 Myths About Open Science That Just Won’t Die @syeducation.bsky.social

and more!

rdmweekly.substack.com/p/rdm-weekly...

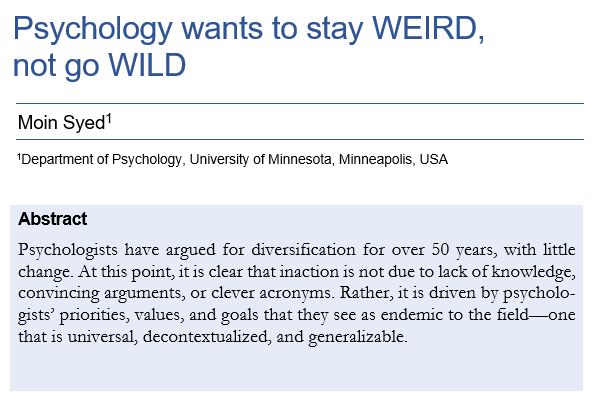

"Psychology wants to stay WEIRD, not go WILD"

Why hasn't psychology diversified its samples, methods, theories, etc.? Because it doesn't want to. osf.io/preprints/ps...

www.bps.org.uk/psychologist...

If you're submitting AI slop, you're a loser. You're just making these great free services harder to run, and making it more difficult to separate signal (science) from noise (your crappy AI shit).

▶️60 openly available experience sampling datasets (16K+ participants, 740K+ obs.) in one place

▶️Harmonized (meta-)data, fully open-source software

▶️Filter & search all data, simply download via R/Python

Find out more:

🌐 openesmdata.org

📝 doi.org/10.31234/osf...

Chocolate is more desirable than poop:

Cohen's d_rm = 6.20, 95%CI [5.63, 6.78]

N = 486, two single-item 1-7 Likert scales of desirability.

w/

@jamiecummins.bsky.social

@jamiecummins.bsky.social and I are replicating Balcetis & Dunning's (2010) "chocolate is more desirable than poop" (Cohen's d = 4.52)

Let us know in the replies what effect size you think we'll find. Details of the study in the thread below.

Preprints are read, shared, and cited, yet still dismissed as incomplete until blessed by a publisher. I argue that the true measure of scholarship lies in open exchange, not in the industry’s gatekeeping of what counts as published.

By @syeducation.bsky.social

septentrio.uit.no/index.php/no...

Many social scientists seem to think so, and are already using "silicon samples" in research.

One problem: depending on the analytic decisions made, you can basically get these samples to show any effect you want.

THREAD 🧵

* With federal funding exceptionally unreliable, scientists say they are stressed about spending thousands of grant dollars on unexpected and questionable open-access charges.

By @stephaniemlee.bsky.social for @chronicle.com

www.chronicle.com/article/maki...