Current primary project: tech for substance use disorder programs.

1. We just hired the extremely talented @drawimpacts.bsky.social for a design project.

2. The estimable @roxanadaneshjou.bsky.social and I wrote a rapid response to a BMJ (British Medical Journal) article. www.bmj.com/content/387/...

When Cursor added agentic coding in 2024, adopters produced 39% more code merges, with no sign of a decrease in quality (revert rates were the same, bugs dropped) and no sign that the scope of the work shrank. papers.ssrn.com/sol3/papers....

Join us tomorrow, November 13 at 10 AM PT for a livestream on the risks of AI and how we can safeguard civil liberties online. eff.org/livestream-ai

Multi-agent AI systems are becoming increasingly practical for complex tasks. Several architectural patterns are in use today for how specialized agents collaborate, each suited to specific business challenges and workflows. (1️⃣/3️⃣)

🧵

Most of the supposed value is in sci-fi speculation. “Imagine a machine that cures cancer.”

And it’s all built on what seems to be malicious and vast intellectual property theft.

What does OpenAI offer the world?

If you work with a collaborator in China, this bill would:

1) Make you ineligible for new U.S. federal grants (as long as the collaboration exists)

2) If passed, you would only have 90 days to sever the connection, or be banned for up to 5 years.

www.cnn.com/2025/11/06/u...

You’re reducing support tickets and unlocking expansion.

Quiet work only stays tactical if you describe it that way.

Frame outcomes, not effort. That’s how invisible work becomes strategic work.

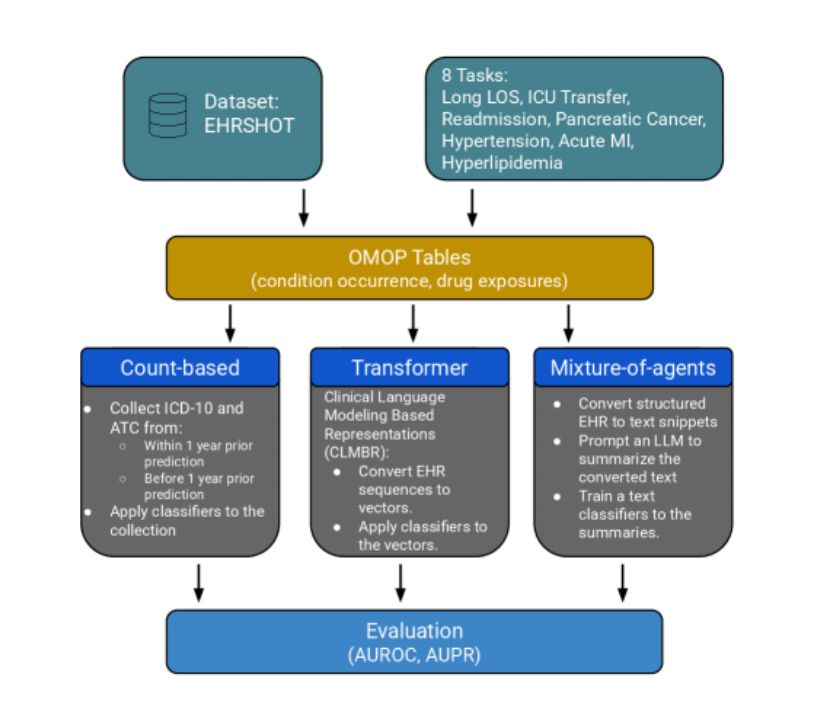

A new preprint by @simocristea.bsky.social et al. shows wins are split. Count-based methods (like LightGBM) remain a strong, simple, and interpretable baseline.

#MedSky #MLSky #MedAI

The answers are saddening:

1) being a woman

2) practicing in a rural area

3) caring for sicker patients and dual-eligible patients

www.acpjournals.org/doi/10.7326/...

How Moderna, the company that helped save the world, unraveled

www.statnews.com/2025/10/30/m...

www.statnews.com/2025/11/05/f... via @statnews.com

Anthropic sweetly asked Sonnet about its preferences in how it wanted to be deprecated

in addition:

- no, still not open weights

- preserving weights and keeping it running internally

- letting models pursue their interests

www.anthropic.com/research/dep...

There's a plausible world where even Timnit would get venom on bsky in a few months lol -- mostly a lot of blind rage at this point.

www.politico.com/newsletters/...

Abstinence-only AI education isn't any better than the sex variety.
