Studying continual learning and adaptation in brains and ANNs.

The 1st preprint of my PhD 🥳 fast dynamical similarity analysis (fastDSA):

📜: arxiv.org/abs/2511.22828

💻: github.com/CMC-lab/fast...

I’ll be @cosynemeeting.bsky.social - happy to chat 😉

www.ocrampal.com/what-a-neuro...

#philosophy #science #psychology #AI #intelligence #physics #biology #philmind #philsci #philsky #philpsy #neurosky #neuroskyence

theoreticalneuroscience.no/thn36

Manifold analysis has changed our thinking on how cortex works. One of the pioneers of this modelling approach explains.

arxiv.org/abs/2512.15948

I welcome any feedback on these preliminary ideas.

It’s a nasty problem, but @vgeadah.bsky.social made tremendous progress, ending up with some really elegant formalisms.

A year ago, I was presenting our work at IEEE CDC on solving this problem for stochastic LQR.

arxiv.org/abs/2502.15014

Short 🧵 on the results, and how I think about them a year later.

bigthink.com/neuropsych/n...

#neuroscience

AI models adjust millions of internal settings to get better at a task. But how are these adjustments determined? For decades, we've mostly figured this out through trial & error.

We took a different approach...🧵 (1/6)

🔗 openreview.net/forum?id=oMi...

@david-g-clark.bsky.social and Ashok Litwin-Kumar! Timely too, as “low-D manifold” has been trending again. (If you read thru the end, we escape Flatland and return to the glorious high-D world we deserve.) www.biorxiv.org/content/10.6...

🔗 doi.org/10.1101/2025...

Led by @atenagm.bsky.social @mshalvagal.bsky.social

arxiv.org/pdf/2410.03972

It started from a question I kept running into:

When do RNNs trained on the same task converge/diverge in their solutions?

🧵⬇️

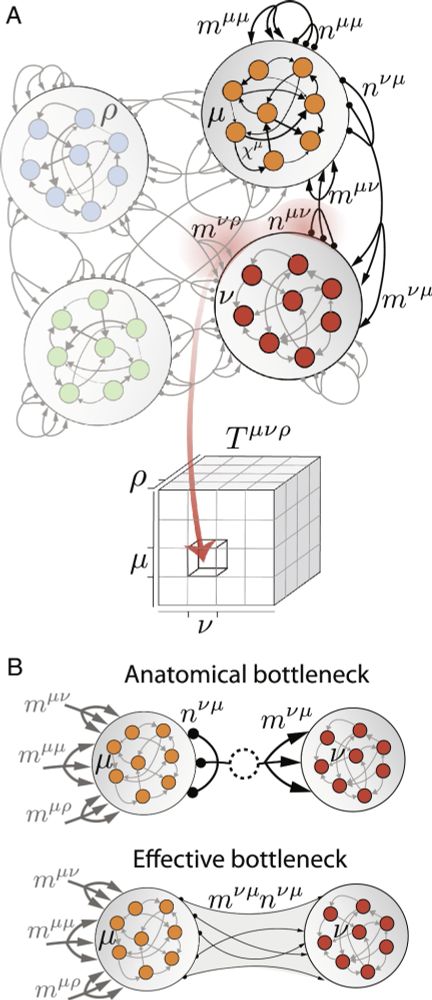

For neuro fans: conn. structure can be invisible in single neurons but shape pop. activity

For low-rank RNN fans: a theory of rank=O(N)

For physics fans: fluctuations around DMFT saddle⇒dimension of activity

#neuroskyence

www.thetransmitter.org/neural-dynam...

We show how to exactly map recurrent spiking networks into recurrent rate networks, with the same number of neurons. No temporal or spatial averaging needed!

Presented at Gatsby Neural Dynamics Workshop, London.

#neuroskyence

www.thetransmitter.org/systems-neur...

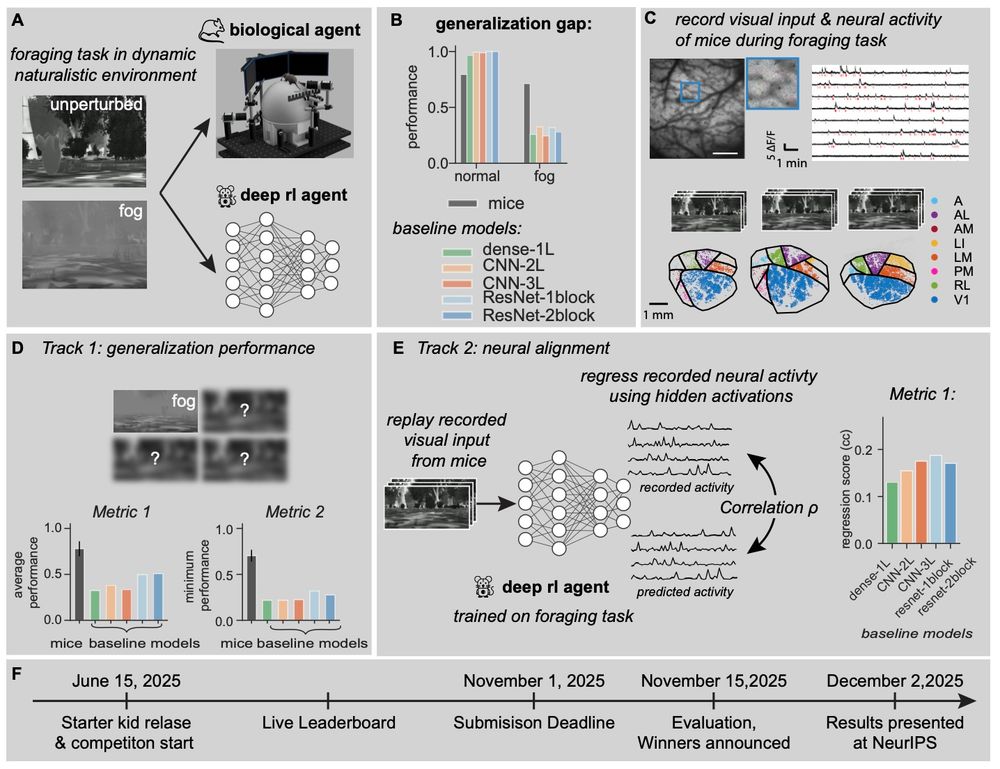

We present our #NeurIPS competition. You can learn about it here: robustforaging.github.io (7/n)

To quote someone from my lab (they can take credit if they want):

Def not news to those of us who use [ANN] models, but a good counter argument to the "but neurons are more complicated" crowd.

arxiv.org/abs/2504.08637

🧠📈 🧪

More on Spatial Computing:

doi.org/10.1038/s414...

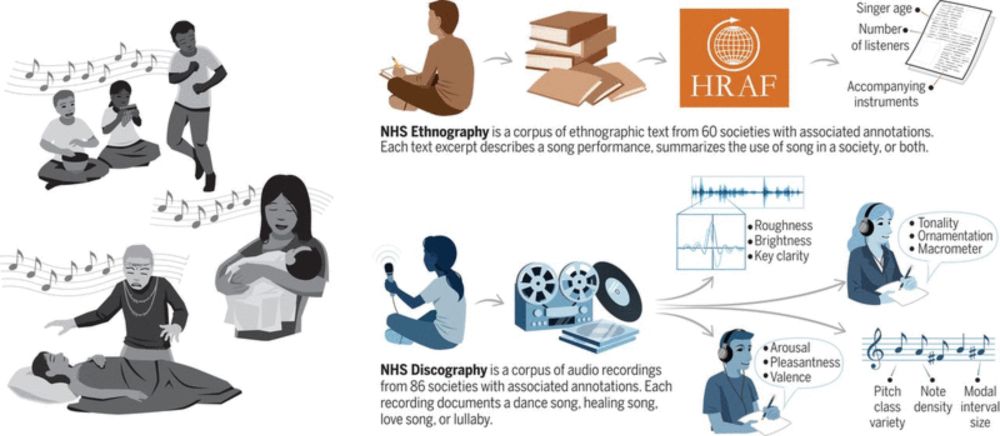

PNAS link: www.pnas.org/doi/10.1073/...

(see dclark.io for PDF)

An explainer thread...

www.science.org/doi/10.1126/...

#neuroscience

How do neural dynamics in motor cortex interact with those in subcortical networks to flexibly control movement? I’m beyond thrilled to share our work on this problem, led by Eric Kirk @eric-kirk.bsky.social with help from Kangjia Cai!

www.biorxiv.org/content/10.1...

My group will study offline learning in the sleeping brain: how neural activity self-organizes during sleep and the computations it performs. 🧵

Neuromorphic hierarchical modular reservoirs

www.biorxiv.org/content/10.1...

How do we build neural decoders that are:

⚡️ fast enough for real-time use

🎯 accurate across diverse tasks

🌍 generalizable to new sessions, subjects, and even species?

We present POSSM, a hybrid SSM architecture that optimizes for all three of these axes!

🧵1/7
