Reposted by Erin Campbell

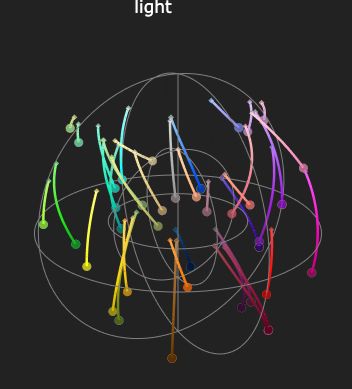

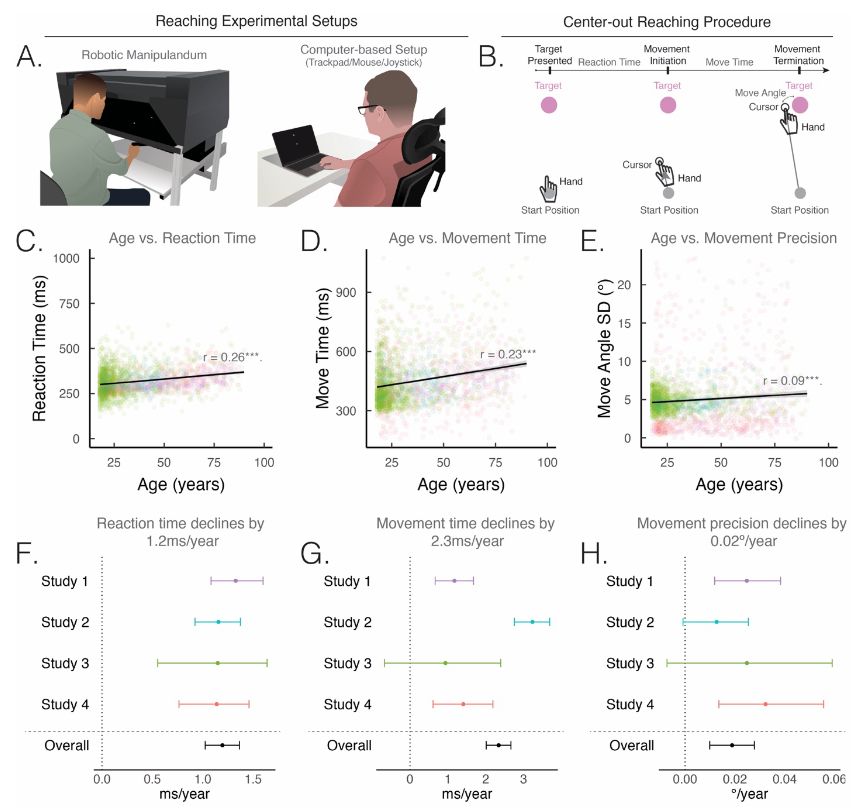

samuel mehr

@mehr.nz

· 28d

Reposted by Erin Campbell

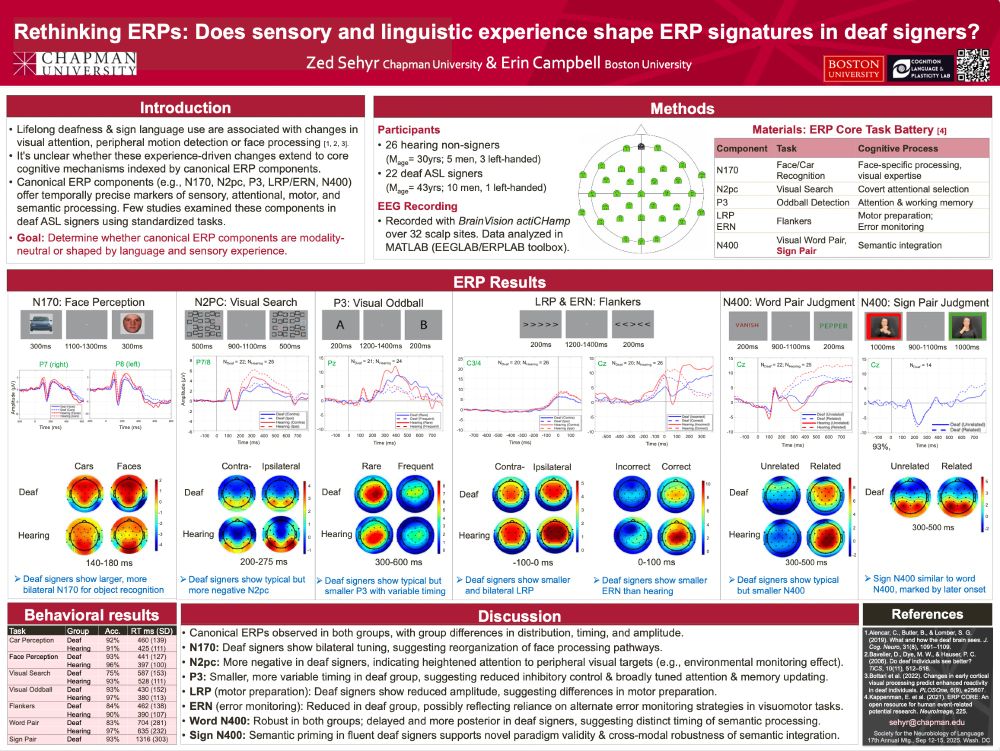

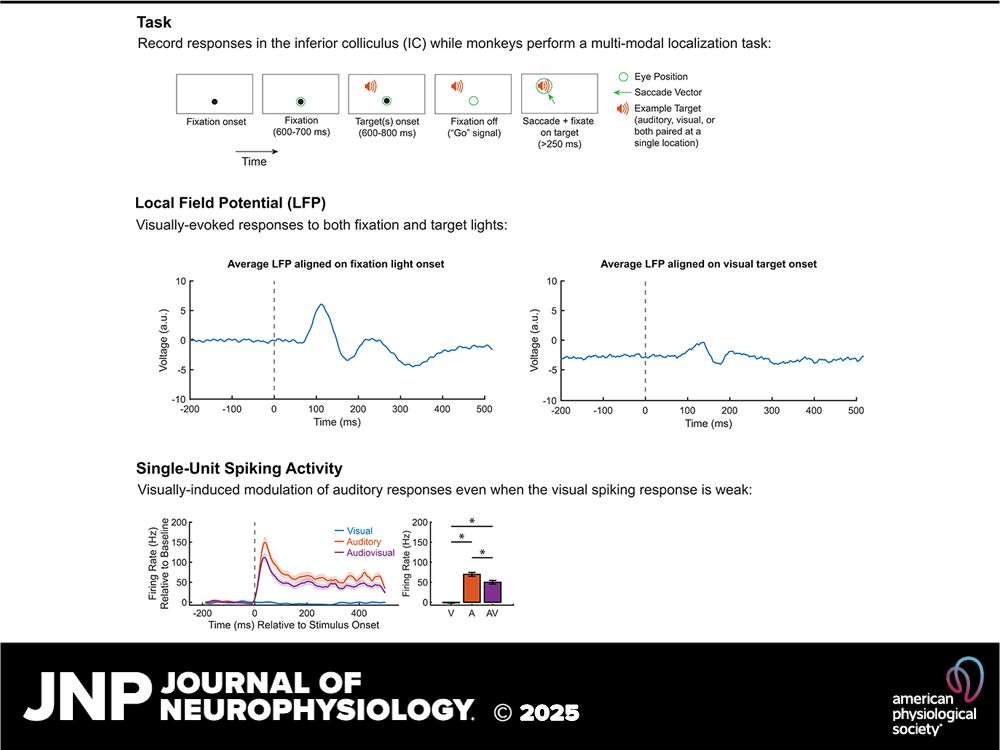

Erin Campbell

@erinecampbell.bsky.social

· Jul 31

Reposted by Erin Campbell

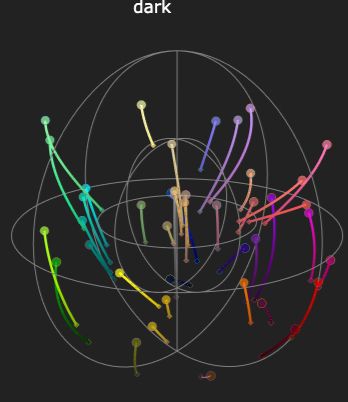

Erin Campbell

@erinecampbell.bsky.social

· Jul 14

Reposted by Erin Campbell

Jenni Sander

@jennisander.bsky.social

· Jun 10

Reposted by Erin Campbell

Mike Ginn

@shutupmikeginn.bsky.social

· Mar 8

Reposted by Erin Campbell