Joris Frese

@fresejoris.bsky.social

3.5K followers

1.4K following

220 posts

PhD candidate in political science at the EUI.

Interested in political behavior, quantitative methods, metascience.

https://www.jorisfrese.com/

Posts

Media

Videos

Starter Packs

Pinned

Reposted by Joris Frese

Reposted by Joris Frese

Reposted by Joris Frese

Reposted by Joris Frese

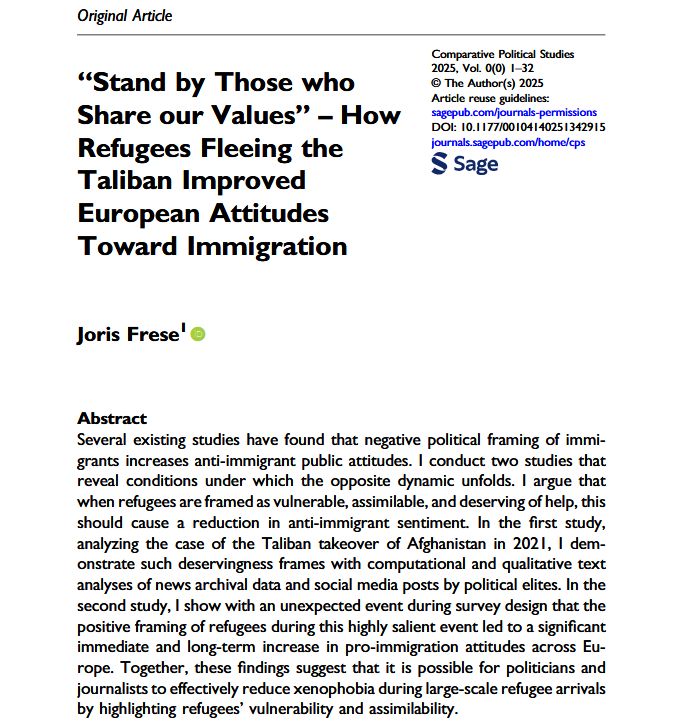
