gershmanlab.com/textbook.html

It's a textbook called Computational Foundations of Cognitive Neuroscience, which I wrote for my class.

My hope is that this will be a living document, continuously improved as I get feedback.

osf.io/preprints/ps...

news.harvard.edu/gazette/stor...

Caveats:

-*-*-*-*

> These are my opinions, based on my experiences; they are not secret tricks or guarantees

> They are general guidelines, not meant to cover a host of idiosyncrasies and special cases

In a new paper led by @lanceying.bsky.social, we introduce a cognitive model that achieves this by synthesizing rational agent models on-the-fly -- presented at #EMNLP2025!

I highly recommend 'Normal Rationality', which collects her essays.

If you're looking to start, maybe look here:

bit.ly/4qk2GZS

bit.ly/46XudIV

bit.ly/4nkfLQc

bit.ly/3KUIiOy

"Using teacher models that answer at varying levels of abstraction, from executable action sequences to high-level subgoal descriptions, we show that lifelong learning agents benefit most from answers that are abstracted and decoupled from the current state."

A: We're not sure, but it achieved 94.7% on CHIKENBench-Large

www.biorxiv.org/cgi/content/...

www.biorxiv.org/content/10.1...

How do #dopamine neurons perform the key calculations in reinforcement #learning?

Read on to find out more! 🧵

I’m excited to stay at FAIR and work with @asli-celikyilmaz.bsky.social and friends on fun LLM questions; I’ll be working from the New York office so we’re sticking around.

📃 authors.elsevier.com/a/1lo8f2Hx2-...

**TreeIRL** is a novel planner that combines classical search with learning-based methods to achieve state-of-the-art performance in simulation and in **real-world autonomous driving**! 🚘 🤖 🚀

grants.nih.gov/grants/guide...

Humans are capable of sophisticated theory of mind, but when do we use it?

We formalize & document a new cognitive shortcut: belief neglect, i.e., inferring others' preferences as if their beliefs are correct 🧵

We examine how people figure out what happened by combining visual and auditory evidence through mental simulation.

Paper: osf.io/preprints/ps...

Code: github.com/cicl-stanfor...

arxiv.org/abs/2509.09737

We study how two cognitive constraints—action consideration set size & policy complexity—interact in context-dependent decision making, and how humans exploit their synergy to reduce behavioral suboptimality.

osf.io/preprints/ps...

kempnerinstitute.harvard.edu/kempner-inst...

www.nytimes.com/2025/07/28/u...

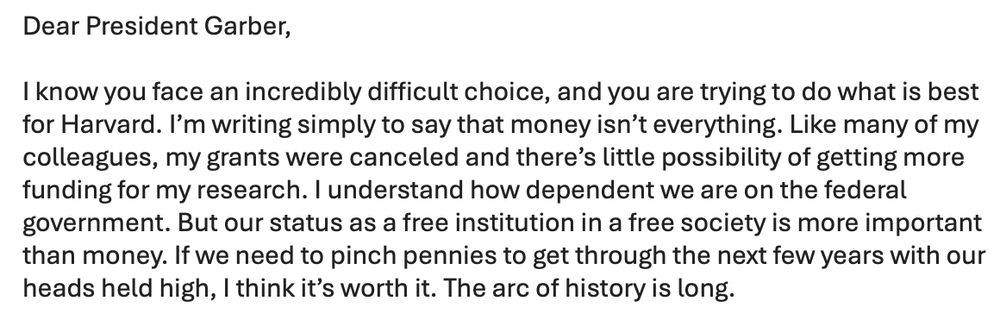

I wrote to the president of Harvard. I hope other faculty will speak their conscience, even if it means more struggle ahead.

#ucsd #newprofessor #womeninSTEM
