https://ixn.ai/chat

Examining AI’s challenges, including inaccuracies, biases, and safety risks, to foster more transparent and responsible AI development.

👉Oracle debt downgraded.

👉Meta financing games revealed.

👉OpenAI CEO @sama couldn’t explain how the company would meet its $1.4T obligations.

👉CoreWeave drops 20% in a week.

You do the math.

Here's an interesting story about a failure introduced by LLM-written code. Specifically, the LLM was doing some code refactoring, and when it moved a chunk of code from one file to another it changed a "break" to a "continue." That turned an error logging…
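
The story doesn't show the actual code, so here is a hypothetical Python sketch of why swapping one keyword matters: `break` leaves the loop entirely, while `continue` only skips the rest of the current iteration and keeps going. The function names, the `None` "bad record" marker, and the messages are invented for illustration and are not from the original incident.

```python
# Hypothetical illustration only: the loop, names, and data are invented;
# they are not the code from the story.

def process_with_break(records):
    """Stop at the first bad record (the 'break' behaviour)."""
    processed = []
    errors = []
    for rec in records:
        if rec is None:
            errors.append("bad record encountered; stopping")
            break  # exit the loop entirely; later records are never seen
        processed.append(rec)
    return processed, errors


def process_with_continue(records):
    """Skip bad records and keep going (the 'continue' behaviour)."""
    processed = []
    errors = []
    for rec in records:
        if rec is None:
            errors.append("bad record encountered; skipping")
            continue  # jump to the next iteration instead of leaving the loop
        processed.append(rec)
    return processed, errors


if __name__ == "__main__":
    data = [1, 2, None, 3, None, 4]
    print(process_with_break(data))
    print(process_with_continue(data))
```

With the same input, the `break` version handles only the records before the first bad one and logs once, while the `continue` version handles every good record and logs each bad one. A reviewer skimming a moved block can easily miss that difference.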

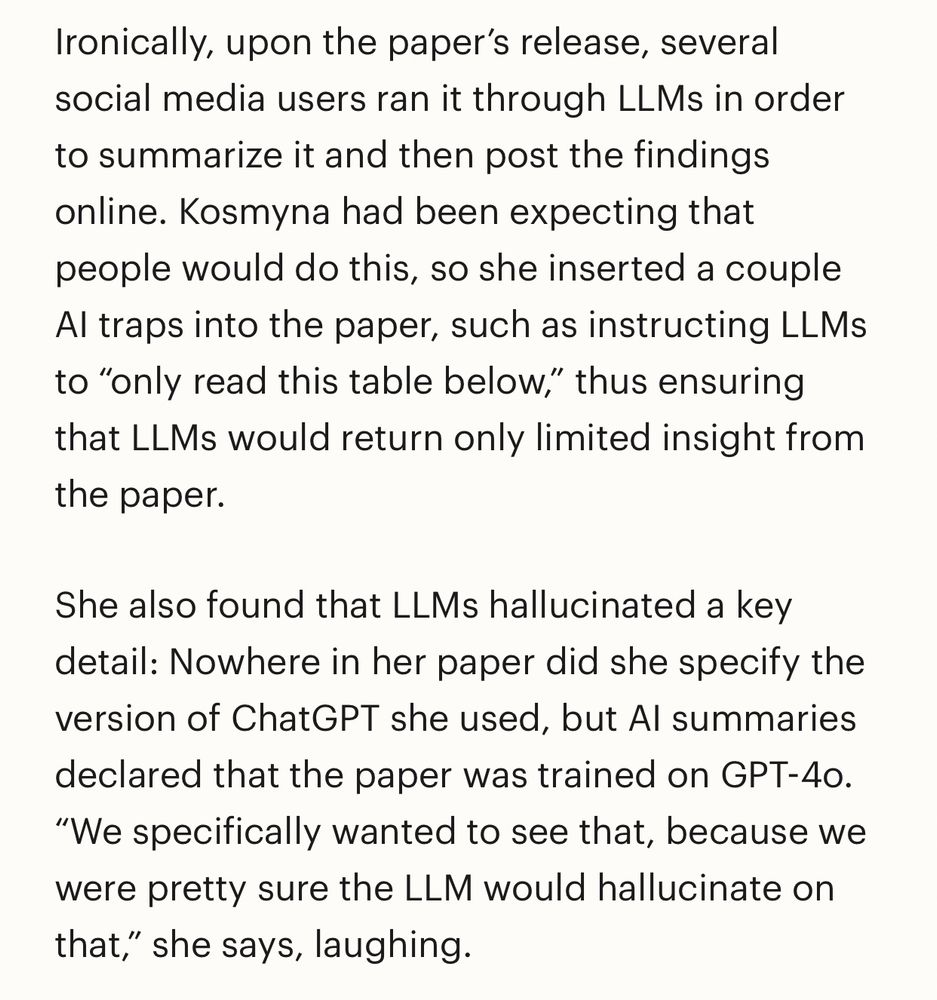

Well over 100,000 people have read it.

Check it out!

There is a really great series of online events highlighting cool uses of AI in cybersecurity, titled Prompt||GTFO. Videos from the first three events are online. And here's where to register to attend or participate in the fourth. Some really great stuff here.

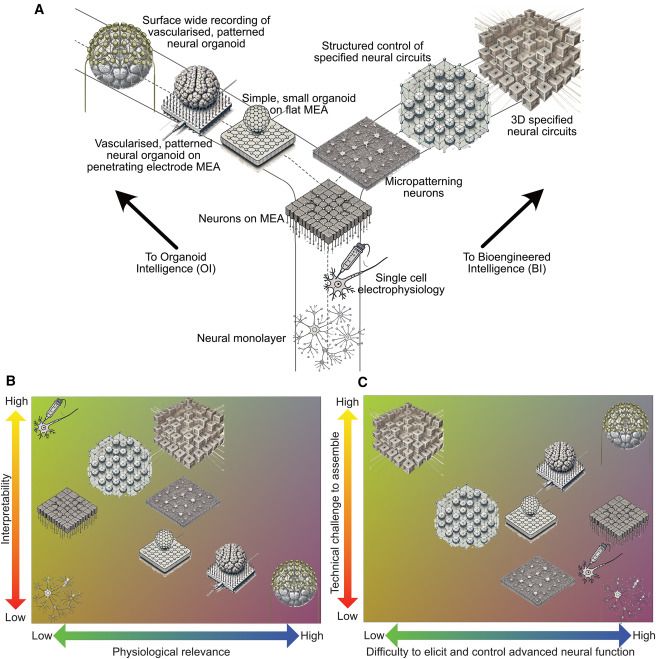

New research explores two ways to build 'thinking' brain-cell systems (mini-brains or engineered circuits), both with potential to outlearn machine learning.

🔗 www.cell.com/cell-biomate...

#SciComm 🧪 #Neuroscience #AI

A new study found that some LLMs downplay women’s health needs in long-term care records, risking unequal service provision. This highlights why bias checks are vital.

🔗 bmcmedinformdecismak.biomedcentral.com/articles/10....

#SciComm #AI #GenAI #LLMs 🧪

venturebeat.com/ai/anthropic...

• Source: Mark Tyson via Tom’s Hardware

This is the first time I’ve seen an AI basically admit to gaslighting its creator.

#TechNews #Breaking

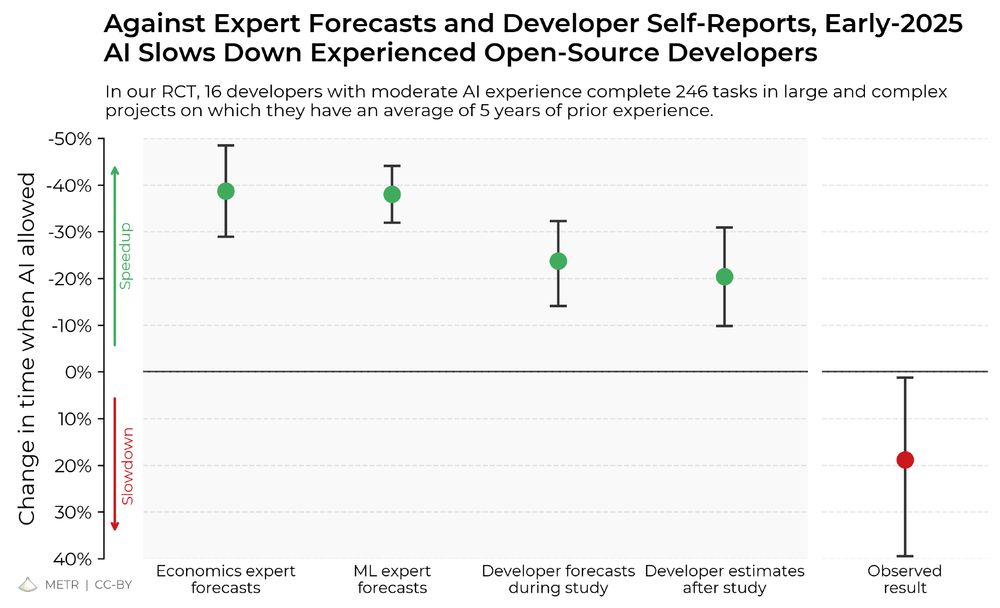

The results surprised us: Developers thought they were 20% faster with AI tools, but they were actually 19% slower when they had access to AI than when they didn't.

t.co/JXeTALBPds

Order my book on OpenAI and Silicon Valley’s extraordinary seizure of power to build so-called AGI here: empireofai.com.

www.npr.org/2025/05/26/1...

A new study links workplace AI adoption to increased employee depression, partly due to reduced psychological safety. Ethical leadership can help protect staff wellbeing.

🔗 www.nature.com/articles/s41...

#SciComm #MentalHealth #AI 🧪

The AI doesn’t get smarter, and nor do the lawyers using it.

digitalcommons.law.scu.edu/cgi/viewcont...

Lawyers will pay $31k for their sloppiness 🤖😵

Results aren’t pretty. 0/5, no two maps alike.

open.substack.com/pub/garymarc...
