Jaemin Cho

@jmincho.bsky.social

190 followers

300 following

32 posts

Incoming assistant professor at JHU CS & Young Investigator at AI2

PhD at UNC

https://j-min.io

#multimodal #nlp


Pinned

Jaemin Cho

@jmincho.bsky.social

· Jul 3

Reposted by Jaemin Cho

Jaemin Cho

@jmincho.bsky.social

· May 21

Jaemin Cho

@jmincho.bsky.social

· May 20


Reposted by Jaemin Cho

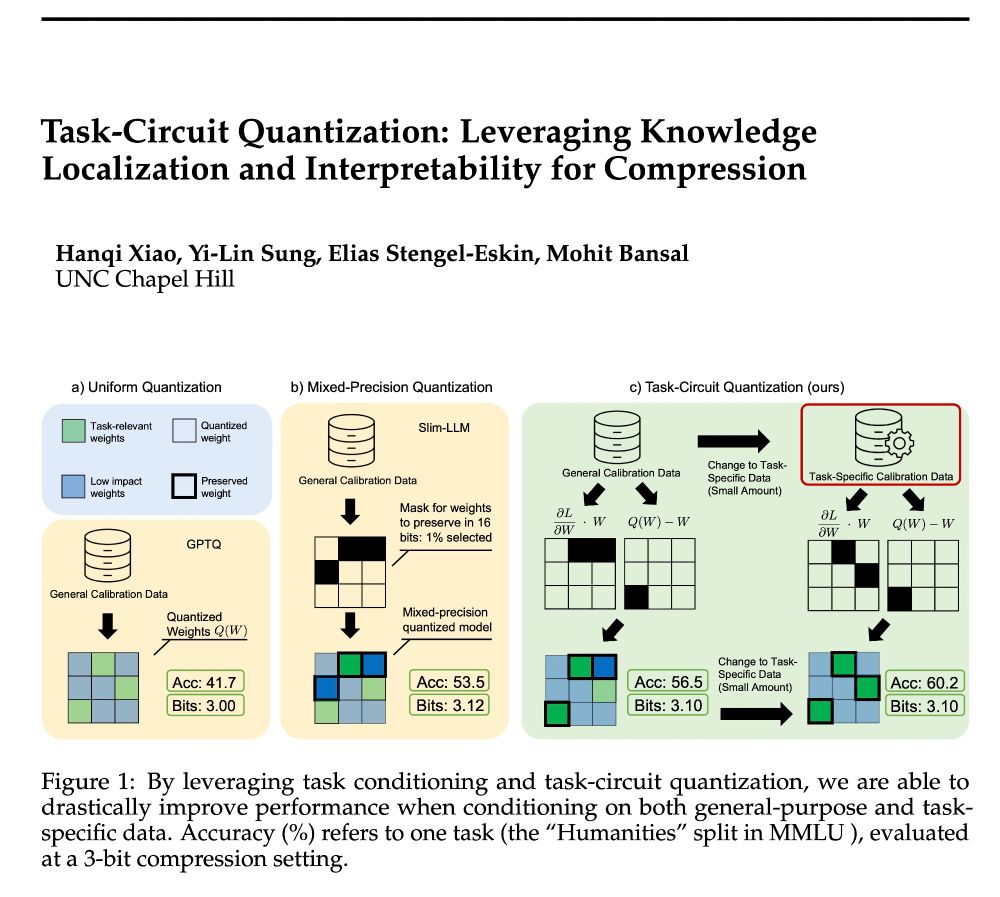

Mohit Bansal

@mohitbansal.bsky.social

· May 5

Reposted by Jaemin Cho

Elias Stengel-Eskin

@esteng.bsky.social

· Apr 29

Reposted by Jaemin Cho

Ana Marasović

@anamarasovic.bsky.social

· Feb 21

Martin Tutek

@mtutek.bsky.social

· Feb 21

Measuring Faithfulness of Chains of Thought by Unlearning Reasoning Steps

When prompted to think step-by-step, language models (LMs) produce a chain of thought (CoT), a sequence of reasoning steps that the model supposedly used to produce its prediction. However, despite mu...

arxiv.org

Reposted by Jaemin Cho