Levi Lelis

@programsynthesis.bsky.social

310 followers

480 following

81 posts

Associate Professor - University of Alberta

Canada CIFAR AI Chair with Amii

Machine Learning and Program Synthesis

he/him; ele/dele 🇨🇦 🇧🇷

https://www.cs.ualberta.ca/~santanad

Posts

Reposted by Levi Lelis

Marc Lanctot

@sharky6000.bsky.social

· Jun 29

Reposted by Levi Lelis

Matthew Guzdial

@matthewguz.bsky.social

· Jun 16

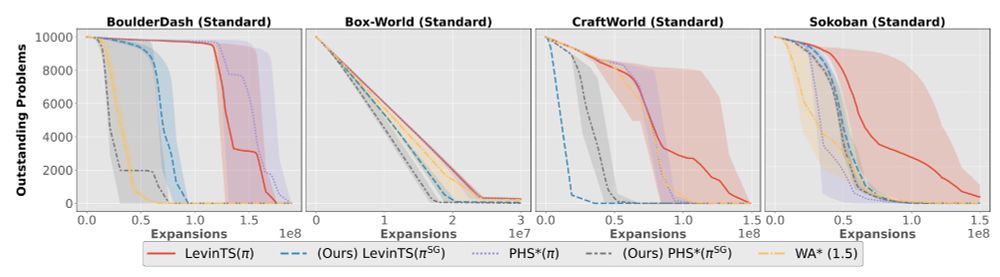

Levi Lelis

@programsynthesis.bsky.social

· Jun 13
