Qihong (Q) Lu

@qlu.bsky.social

1.4K followers

650 following

62 posts

Computational models of episodic memory

Postdoc with Daphna Shohamy & Stefano Fusi @ Columbia

PhD with Ken Norman & Uri Hasson @ Princeton

https://qihongl.github.io/

Qihong (Q) Lu

@qlu.bsky.social

· May 8

Reposted by Qihong (Q) Lu

Sreejan Kumar

@sreejan.bsky.social

· Sep 6

Sensory Compression as a Unifying Principle for Action Chunking and Time Coding in the Brain

The brain seamlessly transforms sensory information into precisely-timed movements, enabling us to type familiar words, play musical instruments, or perform complex motor routines with millisecond pre...

www.biorxiv.org

Qihong (Q) Lu

@qlu.bsky.social

· Sep 5

Hayoung Song

@hayoungsong.bsky.social

· Sep 5

A neural network with episodic memory learns causal relationships between narrative events

Humans reflect on past memories to make sense of an ongoing event. Past work has shown that people retrieve causally related past events during comprehension, but the exact process by which this causa...

www.biorxiv.org

Reposted by Qihong (Q) Lu

Dirk Gütlin

@gutlin.bsky.social

· Aug 30

Representations of stimulus features in the ventral hippocampus

The ventral hippocampus (vHPC) controls emotional response to environmental cues, yet the mechanisms are unclear. Biane et al. examine how positive and negative experiences are encoded by vHPC ensembl...

www.cell.com

Reposted by Qihong (Q) Lu

Xinchi Yu

@xinchiyu.bsky.social

· Jun 27

Reposted by Qihong (Q) Lu

Mariam Aly

@mariamaly.bsky.social

· Aug 9

Reposted by Qihong (Q) Lu

Hyunwoo Gu

@hyunwoogu.bsky.social

· Jul 29

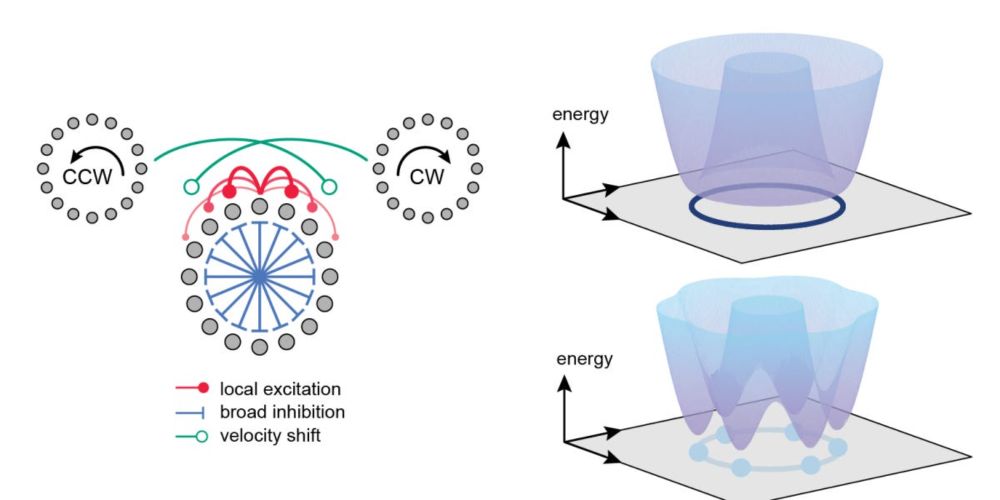

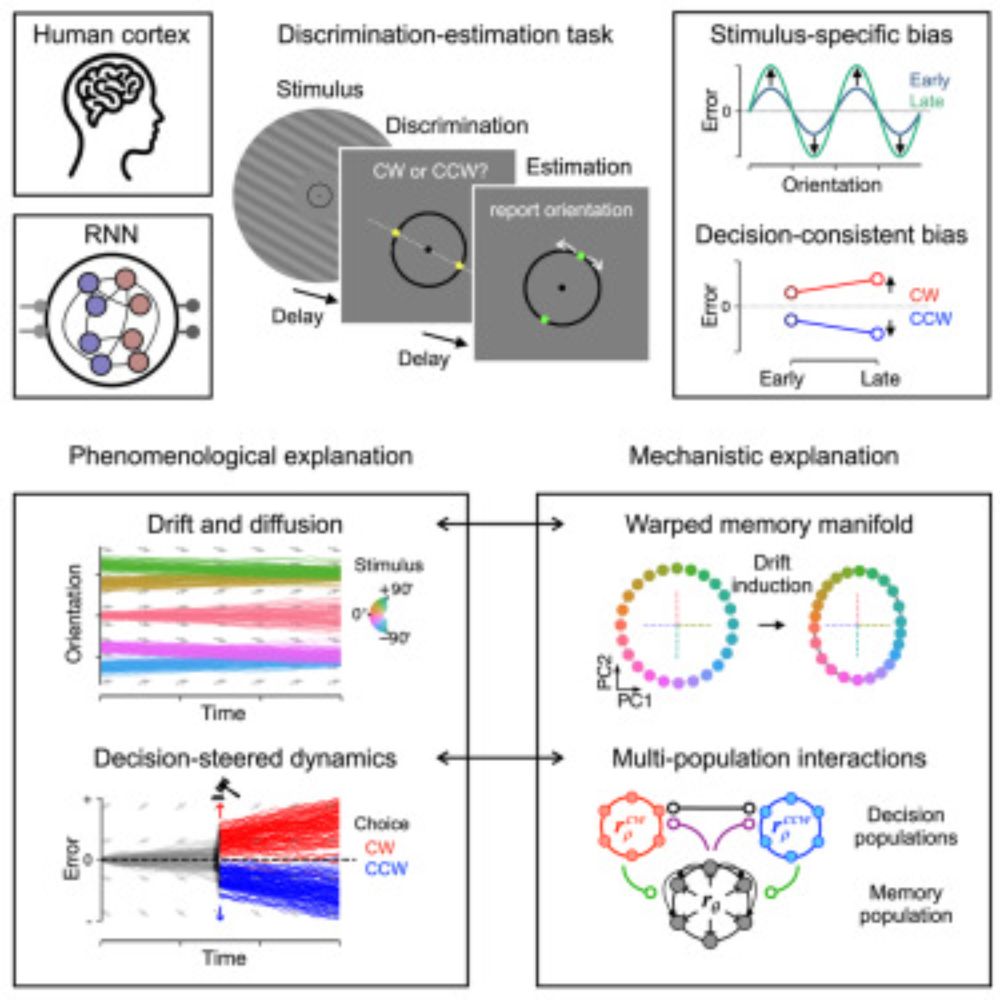

Attractor dynamics of working memory explain a concurrent evolution of stimulus-specific and decision-consistent biases in visual estimation

People exhibit biases when perceiving features of the world, shaped by both external stimuli and prior decisions. By tracking behavioral, neural, and mechanistic markers of stimulus- and decision-rela...

dlvr.it

Qihong (Q) Lu

@qlu.bsky.social

· Jul 28

Qihong (Q) Lu

@qlu.bsky.social

· Jul 28

Reposted by Qihong (Q) Lu

Harrison Ritz

@hritz.bsky.social

· Jul 27

Reposted by Qihong (Q) Lu

Zhenglong Zhou

@neurozz.bsky.social

· Jul 16

A gradient of complementary learning systems emerges through meta-learning

Long-term learning and memory in the primate brain rely on a series of hierarchically organized subsystems extending from early sensory neocortical areas to the hippocampus. The components differ in t...

bit.ly

Reposted by Qihong (Q) Lu

Earl K. Miller

@earlkmiller.bsky.social

· Jul 15

Numerosity coding in the brain: from early visual processing to abstract representations

Abstract. Numerosity estimation refers to the ability to perceive and estimate quantities without explicit counting, a skill crucial for both human and ani

doi.org