Chenting Wang, Yuhan Zhu, Yicheng Xu ... Limin Wang

arxiv.org/abs/2512.01342

Trending on www.scholar-inbox.com

lyra.horse/blog/2025/12...

"CSS hack accidentally becomes regular hack"

www.nber.org/system/files...

If you are around come say hi!

Paper: arxiv.org/abs/2506.12025

Poster #3703 Friday 5 December 4:30 - 7:30 pm

An expert, end-to-end OCR model built on Hunyuan's native multimodal architecture and training strategy. The model is "supposed to" achieve SOTA performance with only 1 billion parameters, significantly reducing deployment costs.

🎬 This is a new HTML-based submission format for TMLR that supports interactive figures and videos, along with the usual LaTeX and images.

🎉 Thanks to TMLR Editors in Chief: Hugo Larochelle, @gautamkamath.com, Naila Murray, Nihar B. Shah, and Laurent Charlin!

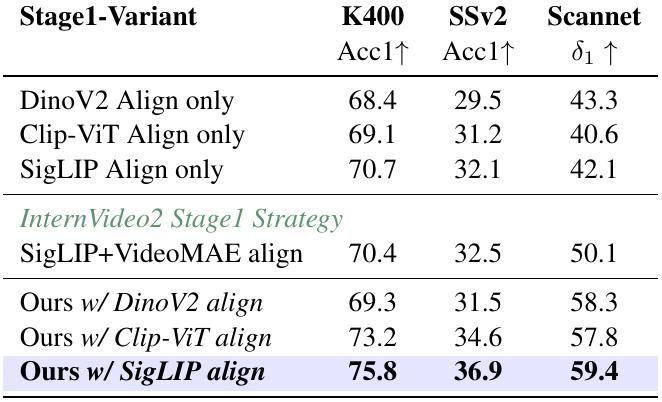

TLDR; Spiritual successor to CroCo with a simpler multi-view objective and larger scale. Beats DINOv3 and CroCo v2 in RoMa, feedforward reconstruction, and rel. pose.

arxiv.org/abs/2511.17309

github.com/davnords/mum

Google DeepMind suggests that pixel-by-pixel autoregressive modeling may scale into a truly unified vision paradigm. Their study shows that as resolution increases, model size must grow much faster than the dataset,

Their first agentic small language model for computer use. This experimental model includes robust safety measures to aid responsible deployment.

Blog: www.microsoft.com/en-us/resear...

Model: huggingface.co/microsoft/Fa...

All models, datasets, code etc released.

Really excited about this project! Sharan, the lead student author, was a joy to work with.

My favorites:

1) CAD representation

2) synthetic data to help city-scale reconstruction

3) trends in 3D vision

4) visual chain-of-thoughts?

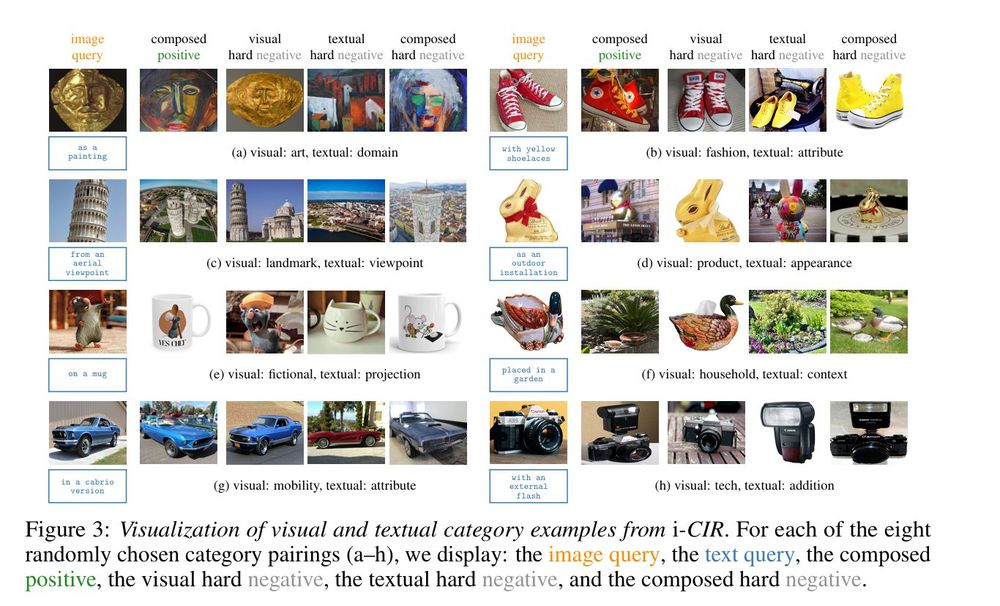

@billpsomas.bsky.social, George Retsinas, @nikos-efth.bsky.social, Panagiotis Filntisis, Yannis Avrithis, Petros Maragos, Ondrej Chum, @gtolias.bsky.social

tl;dr: condition-based retrieval (+dataset) - old photo/sunset/night/aerial/model arxiv.org/abs/2510.25387

We show that synthetic data (e.g., LLM simulations) can significantly improve the performance of inference tasks. The key intuition lies in the interactions between the moment residuals of synthetic data and those of real data

Kinaema: A recurrent sequence model for memory and pose in motion

arxiv.org/abs/2510.20261

By @mbsariyildiz.bsky.social, @weinzaepfelp.bsky.social, G. Bono, G. Monaci and myself

@naverlabseurope.bsky.social

1/9
