Meta-scientist and psychologist. Senior lecturer @unibe.ch. Chief recommender @error.reviews. "Jumped up punk who hasn't earned his stripes." All views a product of my learning history. If behaviorism did not exist, it would be necessary to invent it.

When researchers combine methods or concepts to create publishable units, more out of convenience than any deep curiosity about the resulting research question.

"What role does {my favourite construct} play in {task}?"

Reposted by Dorothy Bishop, Ian Hussey

How many suspicious patterns can you find in this table?

(You don't need to know what the variables are.)

Hints in next post! 👇

More than 6 have passed since the publisher was involved.

No action taken.

One of the component studies has since been retracted.

If you recalculate from Table 3, the primary outcome is Cohen's d = 21 (!)

pubpeer.com/publications...

errors.shinyapps.io/recalc_indep...
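For anyone who wants to check a number like this by hand, here is a minimal sketch of the usual pooled-SD Cohen's d for two independent groups, plus the conversion from an independent-samples t. It assumes the table reports group means, SDs and ns. The function names and the example numbers are hypothetical (they are not the Table 3 values), and the linked recalculation app may use a different formula.

```python
from math import sqrt


def pooled_sd(sd1: float, sd2: float, n1: int, n2: int) -> float:
    """Pooled standard deviation for two independent groups."""
    return sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))


def cohens_d(m1: float, m2: float, sd1: float, sd2: float, n1: int, n2: int) -> float:
    """Standardized mean difference, using the pooled SD as the denominator."""
    return (m1 - m2) / pooled_sd(sd1, sd2, n1, n2)


def t_from_summary(m1: float, m2: float, sd1: float, sd2: float, n1: int, n2: int) -> float:
    """Student's (equal-variance) independent-samples t from summary statistics."""
    return (m1 - m2) / (pooled_sd(sd1, sd2, n1, n2) * sqrt(1 / n1 + 1 / n2))


def d_from_t(t: float, n1: int, n2: int) -> float:
    """Convert an independent-samples t back to Cohen's d."""
    return t * sqrt(1 / n1 + 1 / n2)


if __name__ == "__main__":
    # Hypothetical summary statistics, for illustration only.
    d = cohens_d(10.0, 8.0, 2.0, 2.0, 10, 10)
    t = t_from_summary(10.0, 8.0, 2.0, 2.0, 10, 10)
    # prints: d = 1.00, t = 2.24, d from t = 1.00
    print(f"d = {d:.2f}, t = {t:.2f}, d from t = {d_from_t(t, 10, 10):.2f}")
```

For scale: d = 21 means the two group means sit 21 pooled standard deviations apart, far beyond the effect sizes typically seen in psychology or medicine.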

Medical research often uses non-parametric tests. We looked, and noticed that small-sample rank-based tests (e.g., Mann-Whitney U, Wilcoxon) have significant granularity... like p-values!

So, here's GRIM for U values -- GRIM-U.

Enjoy.

medicalevidenceproject.org/grim-u-obser...
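The core idea can be sketched for the simplest case. Assuming an exact two-sided Mann-Whitney test with no ties (an assumption: tied data and normal approximations behave differently), U can only take the integer values 0 to n1 * n2, so only a handful of p-values are achievable in small groups. The sketch enumerates them and checks whether a reported p could have come from any of them. The function names are hypothetical; this is not the Medical Evidence Project's GRIM-U implementation.

```python
from functools import lru_cache
from math import comb


@lru_cache(maxsize=None)
def u_count(n1: int, n2: int, u: int) -> int:
    """Number of rank orderings (no ties) that give Mann-Whitney U = u for group 1."""
    if u < 0:
        return 0
    if n1 == 0 or n2 == 0:
        return 1 if u == 0 else 0
    # The largest observation is either in group 1 (it beats all n2 values
    # of group 2, adding n2 to U) or in group 2 (adding nothing to U).
    return u_count(n1 - 1, n2, u - n2) + u_count(n1, n2 - 1, u)


def exact_two_sided_p(n1: int, n2: int, u: int) -> float:
    """Exact two-sided p-value for an observed U (assumes no ties)."""
    total = comb(n1 + n2, n1)
    lower = sum(u_count(n1, n2, k) for k in range(0, u + 1)) / total
    upper = sum(u_count(n1, n2, k) for k in range(u, n1 * n2 + 1)) / total
    return min(1.0, 2 * min(lower, upper))


def achievable_p_values(n1: int, n2: int) -> list[float]:
    """All distinct exact two-sided p-values possible for these group sizes."""
    return sorted({exact_two_sided_p(n1, n2, u) for u in range(n1 * n2 + 1)})


def p_is_consistent(reported_p: float, n1: int, n2: int, decimals: int = 3) -> bool:
    """Could some achievable p round to the reported value at this precision?"""
    half_step = 0.5 * 10 ** (-decimals)
    return any(abs(p - reported_p) <= half_step + 1e-12
               for p in achievable_p_values(n1, n2))


if __name__ == "__main__":
    # With 3 participants per group, the smallest exact two-sided p is 0.1,
    # so any reported p < .05 is impossible under these assumptions.
    print(achievable_p_values(3, 3))
    print(p_is_consistent(0.03, 3, 3))  # prints False
```

A fuller check would also need to handle ties, continuity corrections, and the normal approximation that some software switches to, since each produces a different set of achievable p-values.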

Your opening tweet makes a clearly causal claim, which you've repeated, and it doesn't stand up to even brief scrutiny. This is a ubiquitous critique of this type of work.

Reposted by Ian Hussey

Poor lady cannot catch a break.

(links in comments)

Reposted by Ian Hussey, Marcus Credé

#retraction #stemcells #cardiology

@erictopol.bsky.social

Reposted by Ian Hussey

Learn more about how MDPI continues to strengthen its publication ethics policies: buff.ly/nssdnLb

#MDPI #PublicationEthics #OpenAccess

Reposted by Ian Hussey

I'm guessing this isn't news to everyone, but it was to me. Bizarre.

Reposted by Alexander Wuttke

journals.sagepub.com/doi/10.1177/...

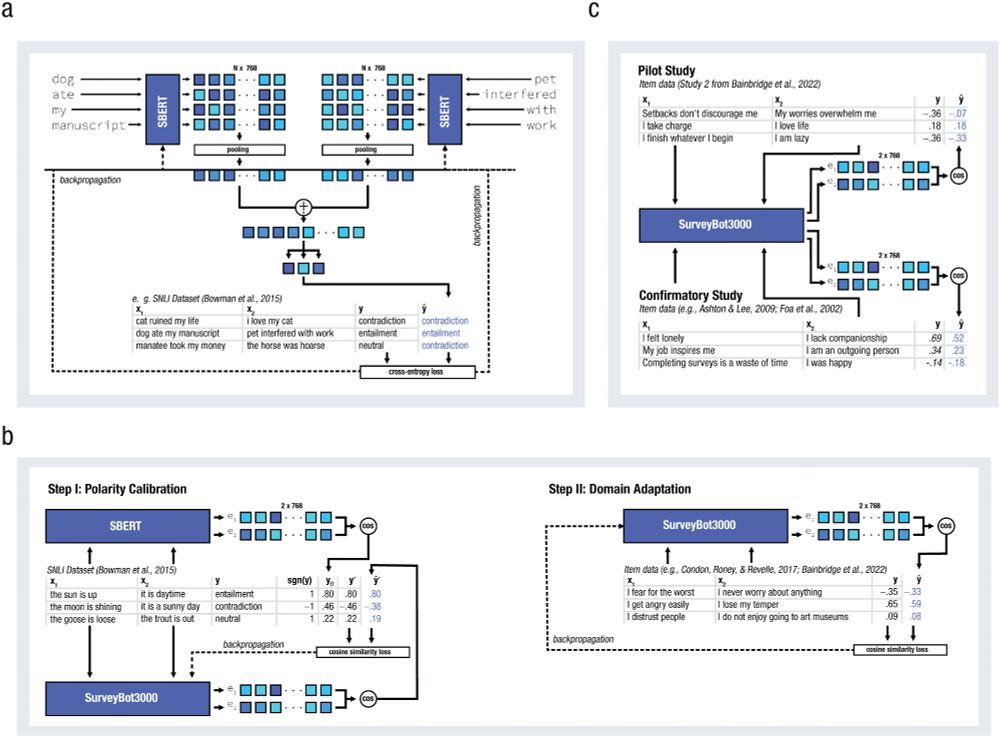

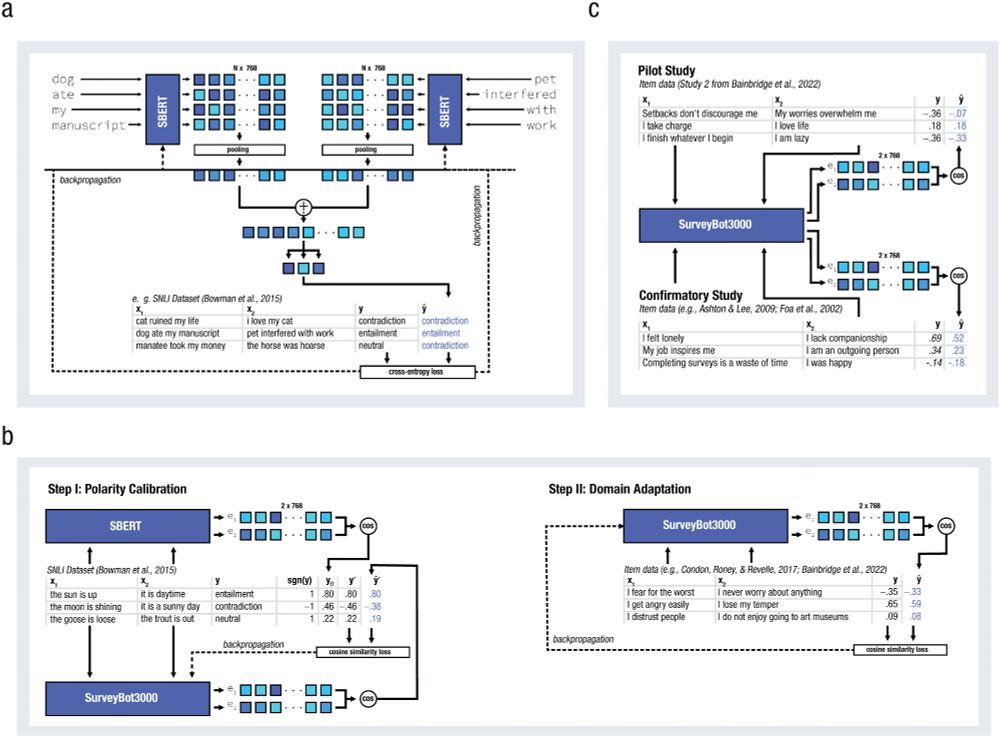

The difference: this does not rely on magic beans or assumed omniscience; it is trained and validated against a large corpus of highly relevant data and makes specific predictions with known accuracy and precision.

journals.sagepub.com/doi/10.1177/...

Reposted by Alberto Acerbi

There is no way this could cause confusion in this heated space.

¯\_(ツ)_/¯