formerly spent time in startups, biggish tech, and physics labs

www.ethanrosenthal.com

arxiv.org/abs/2601.04253

2014: Python over R

2017: PyTorch over Tensorflow

2023: Bluesky over Twitter, Mastodon, and Threads

I don’t miss.

github.com/anthropics/c...

Interviewer: Tell me about a time that you and Claude Code disagreed.

Me: I asked Claude to create a custom serializer. When it showed me its implementation—

Interviewer: Excuse me, it sounds like you weren’t in YOLO mode? I’m sorry, but this role won’t be a good fit.

My life is a perennial partitioning of space and time to make room for activities.

tdhopper.com/blog/how-im-...

www.youtube.com/watch?v=OnXu...

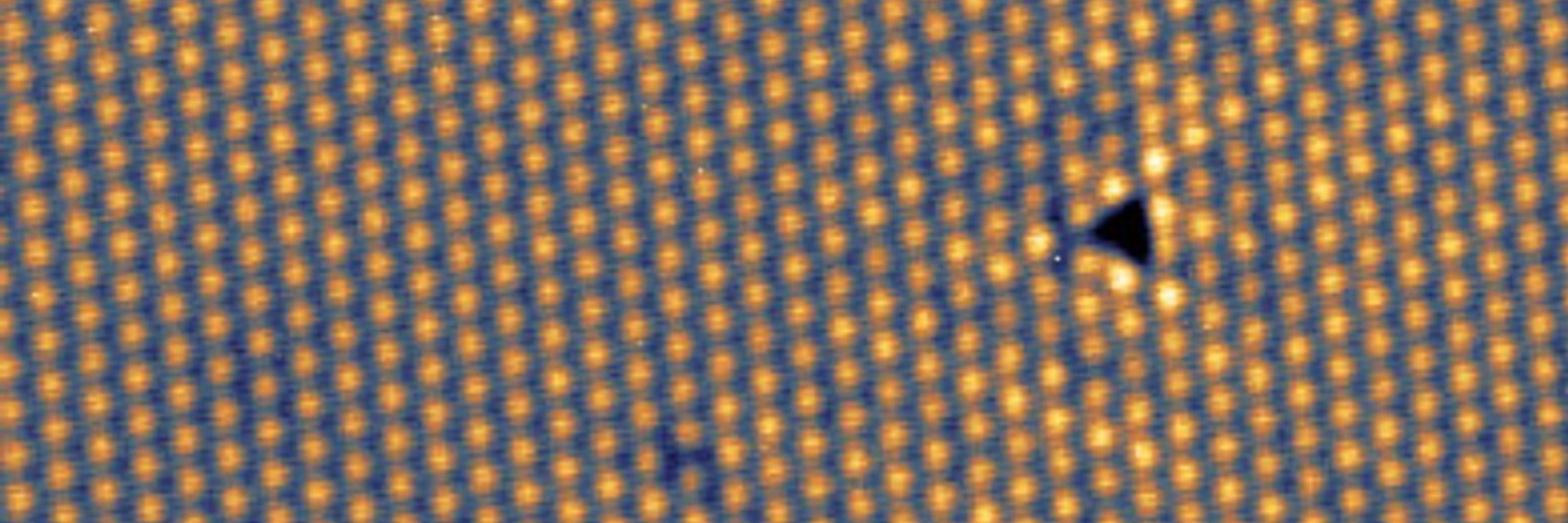

Our latest work shows that pretraining ViTs on procedural symbolic data (eg sequences of balanced parentheses) makes subsequent standard training (eg on ImageNet) more data efficient! How is this possible?! ⬇️🧵