https://www.naher-osten.uni-muenchen.de/personen/wiss_ma/peter-tarras/index.html

andrewjacobs.org/translations...

#shamelessselfpromotion

www.degruyterbrill.com/document/doi...

A great chat, freely available here:

pod.link/1703401848/e...

#AARSBL25 #EthiopicBible #Ethiopic #Manuscripts #Presentations #History #Literature #Bibles

www.wissenschaftskommunikation.de/wir-versinke... #Wisskomm @aiforensics.org @kurzgesagt.org

And to scientists writing press releases, too: calling something "AI" when it was actually your student spending 12 months fitting and validating a model is disingenuous.

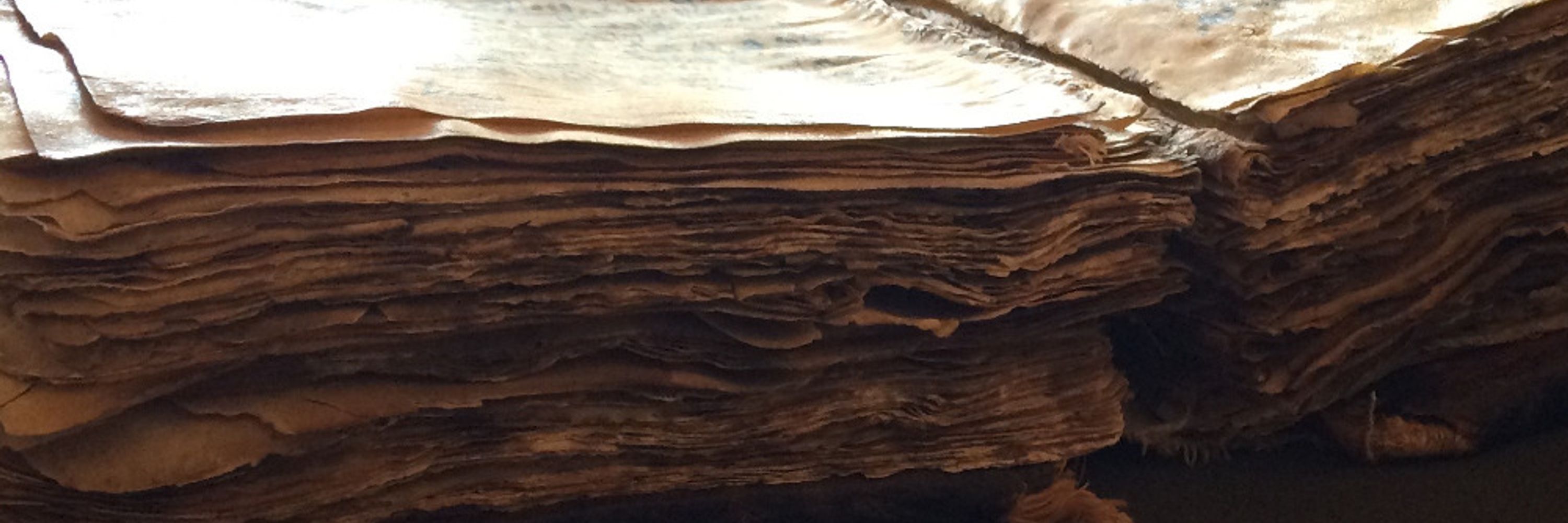

[MS Pierpont Morgan 736, f.187]

www.ancientjewreview.com/read/godsghostwriters
