tim.darcet.fr

Or at least, it seems they 𝘸𝘢𝘯𝘵 some…

ViTs have artifacts in their attention maps. This is because the model uses those patches as “registers”.

Just add new tokens (“[reg]”):

- no artifacts

- interpretable attention maps 🦖

- improved performance!

arxiv.org/abs/2309.16588
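A rough sketch of the token bookkeeping behind “just add new tokens”: extra `[reg]` tokens join the input sequence and are dropped again at the output. The function name, the `[cls]`-then-registers-then-patches ordering, and the stand-in `encoder` callable are assumptions made for illustration, not the paper's code.

```python
from typing import Callable, List, Sequence, Tuple

Token = List[float]  # one embedding vector, kept as a plain list for the sketch


def forward_with_registers(
    patch_tokens: Sequence[Token],
    cls_token: Token,
    registers: Sequence[Token],
    encoder: Callable[[List[Token]], List[Token]],
) -> Tuple[Token, List[Token]]:
    """Run a (hypothetical) encoder on [cls] + [reg]* + patches, then drop the registers."""
    # Assumed ordering: class token first, then registers, then image patches.
    sequence = [cls_token, *registers, *patch_tokens]
    outputs = encoder(sequence)
    cls_out = outputs[0]
    # Register outputs are discarded; only the patch outputs are kept.
    patch_out = list(outputs[1 + len(registers):])
    return cls_out, patch_out


# Usage with an identity "encoder" standing in for the transformer blocks.
patches = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
cls_out, patch_out = forward_with_registers(
    patches,
    cls_token=[0.5, 0.5],
    registers=[[0.0, 0.0], [0.0, 0.0]],
    encoder=lambda seq: list(seq),
)
```

In a real model the registers would be learnable parameters; here they are fixed zero vectors only to keep the example self-contained.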

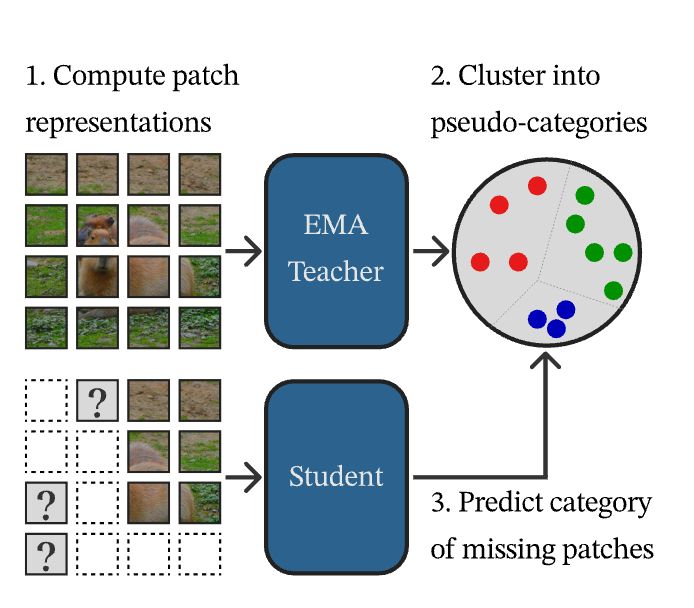

CAPI: Cluster and Predict Latent Patches for Improved Masked Image Modeling.

Webpage & code: juliettemarrie.github.io/ludvig

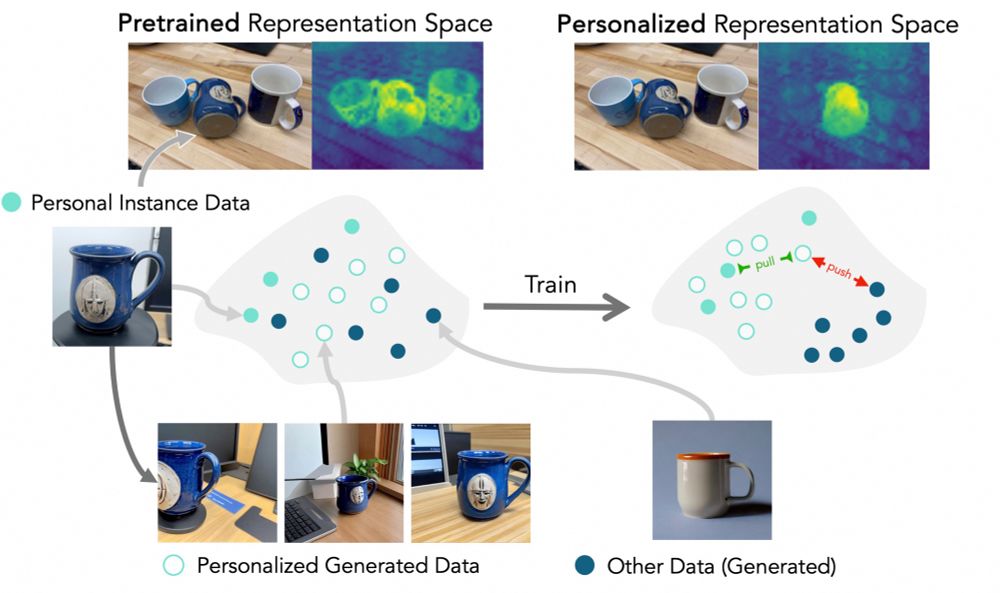

In our new paper, we show you can adapt general-purpose vision models to these tasks from just three photos!

📝: arxiv.org/abs/2412.16156

💻: github.com/ssundaram21/...

(1/n)

Do you need language to scale video models? No.

arxiv.org/abs/2412.15212

Great rigorous benchmarking from my colleagues at Google DeepMind.

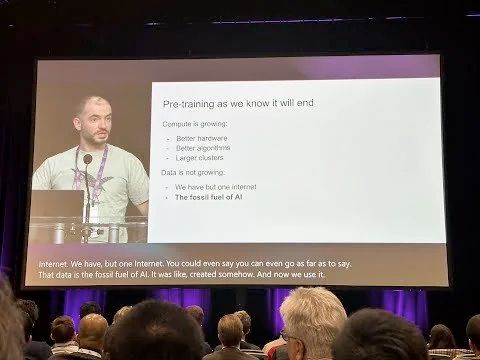

Brilliant talk by Ilya, but he's wrong on one point.

We are NOT running out of data. We are running out of human-written text.

We have more videos than we know what to do with. We just haven't solved pre-training in vision.

Just go out and sense the world. Data is easy.

🗺️ Paper, code, and demo: nicolas-dufour.github.io/plonk

This is one of the largest publicly available natural world image repositories!

Most software does not need new features. None. Zero. Zilch.

ResNet focused on **fur** patterns; DETR did too, but also used **paws** (possibly because they help define bounding boxes); and CLIP's **head** concept oddly included human heads. Is language shaping learned concepts?

dev-discuss.pytorch.org/t/fsdp-cudac...

TL;DR: by default, memory belongs to the stream it was allocated on. To share it with another stream:

- `Tensor.record_stream` -> automatic, but can be suboptimal and nondeterministic

- `Stream.wait` -> manual, but precise control
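A minimal sketch of the two options, assuming the post's `Stream.wait` refers to `torch.cuda.Stream.wait_stream`. The function name and tensor sizes are made up for illustration, and the function returns `None` when PyTorch or a CUDA device is unavailable.

```python
try:
    import torch
except ImportError:  # lets the sketch load on machines without PyTorch
    torch = None


def consume_on_side_stream(use_record_stream: bool):
    """Allocate x on the current stream, read it on a side stream, then free it safely."""
    if torch is None or not torch.cuda.is_available():
        return None

    main = torch.cuda.current_stream()
    side = torch.cuda.Stream()

    x = torch.ones(1024, device="cuda")  # memory owned by the main stream
    side.wait_stream(main)               # side must not read x before it is written
    with torch.cuda.stream(side):
        y = x * 2                        # x is consumed on the side stream

    if use_record_stream:
        # Automatic: mark x as in use on `side`; the caching allocator delays
        # reusing its memory until the work queued on `side` has finished.
        x.record_stream(side)
        del x
        main.wait_stream(side)           # still needed before reading y on main
    else:
        # Manual: order main after side, so freeing x on main is safe.
        main.wait_stream(side)
        del x

    return y.sum().item()


result = consume_on_side_stream(use_record_stream=True)
```

Both branches compute the same value; they differ only in how the allocator learns that `x` was used outside the stream that owns it.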

Delighted to share AIMv2, a family of strong, scalable, and open vision encoders that excel at multimodal understanding, recognition, and grounding 🧵

paper: arxiv.org/abs/2411.14402

code: github.com/apple/ml-aim

HF: huggingface.co/collections/...
