Looking for Fall 2026 Neurotheory PhD positions

Interests: statistical mechanics of real NNs, zebrafish, ephaptic coupling

Searching for mesoscale structure in the brain.

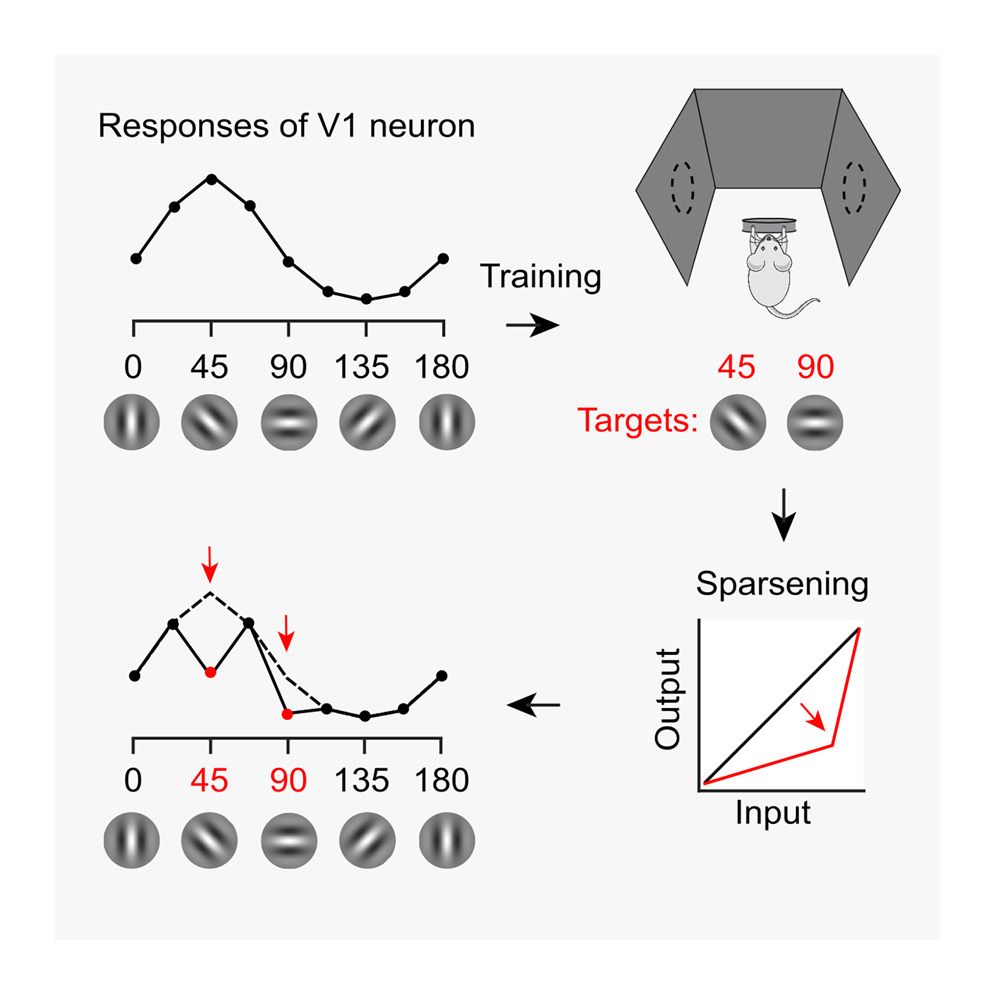

Visual experience orthogonalizes visual cortical responses

Training in a visual task changes V1 tuning curves in odd ways. This effect is explained by a simple convex transformation. It orthogonalizes the population, making it easier to decode.

10.1016/j.celrep.2025.115235

bioRxiv: www.biorxiv.org/content/10.1...

it seems to me that we don’t really even know what a parameter is in the brain, what the relevance of cell types is, how they line up with AI etc

Surprised by his certainty.

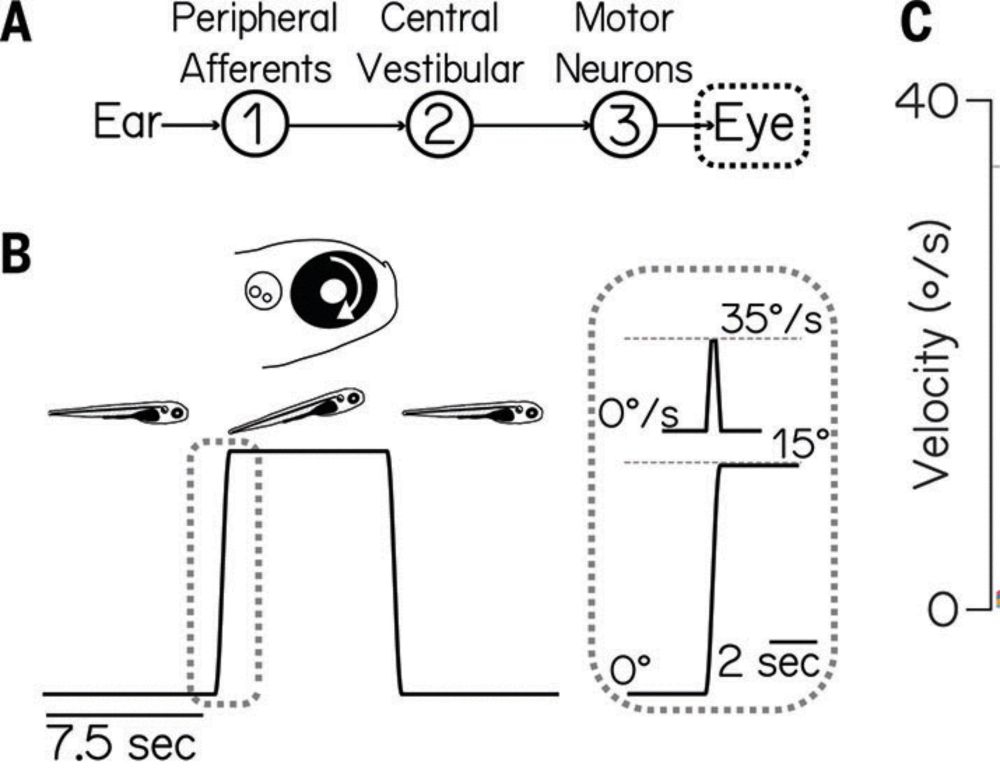

Sensory feedback is always crucial for proper development, right? Wrong!

Crazier still, the motor system is the slowest part of a developing reflex circuit!

Surprises abound in this bluetorial c’mon along….

www.science.org/doi/10.1126/...

1/19

Memories in a network can drift at the single neuron level, but persist at the population level! 🍎🍏

“Drifting assemblies for persistent memory: Neuron transitions and unsupervised compensation”

www.pnas.org/doi/full/10....

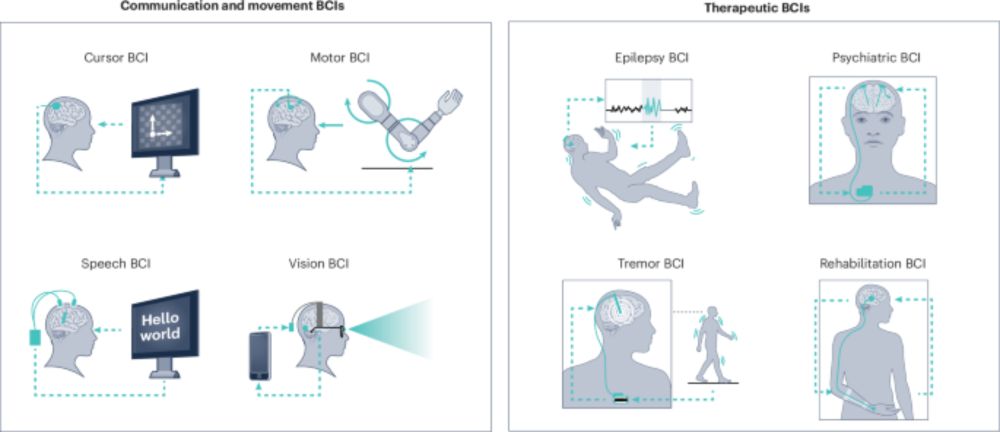

We argue in Nature BME today that if the tech stimulates or records brain activity AND does computation, it's a BCI.

www.nature.com/articles/s41...

But we still need a way to discuss different types of BCI separately... 🧵

#BCI #BrainComputerInterface

Nice review by Danil Tyulmankov

arxiv.org/abs/2412.05501

or u think outside collaborators + analyzing existing datasets are just as well?

A short thread 🧵

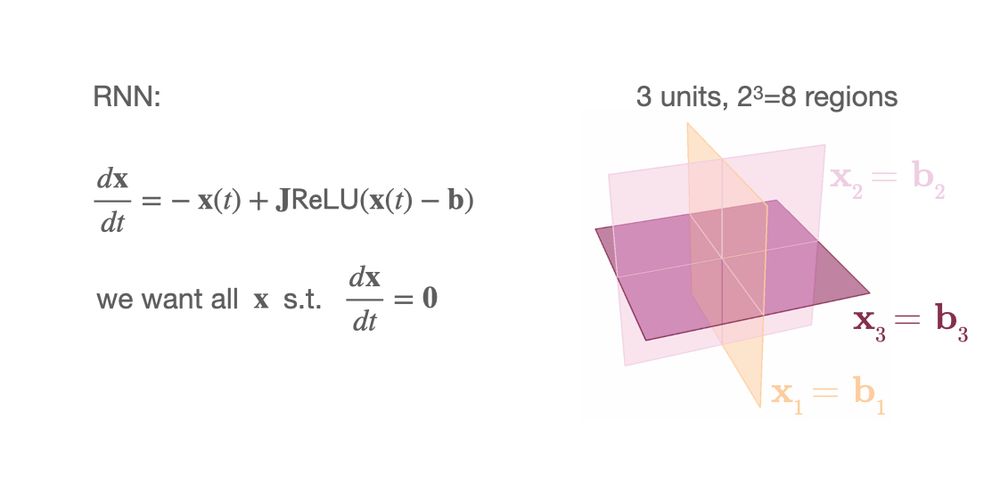

In RNNs with N units and ReLU(x-b) activations, the phase space is partitioned into 2^N regions by the hyperplanes x=b. 1/7
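
A minimal sketch of that partition, in plain Python. It assumes one threshold b_i per unit, so each region is labelled by which units sit above threshold. The helper names (`region_of`, `all_regions`, `effective_weights`) and the example numbers are hypothetical, not taken from the thread or the thesis.

```python
from itertools import product


def region_of(x, b):
    """Label the region containing state x: 1 where x_i > b_i, else 0."""
    return tuple(int(xi > bi) for xi, bi in zip(x, b))


def all_regions(n):
    """Enumerate every activation pattern for n units (2**n of them)."""
    return list(product((0, 1), repeat=n))


def effective_weights(W, pattern):
    """Zero the columns of W that belong to inactive units.

    Inside one region ReLU(x - b) equals D (x - b), with D the diagonal
    0/1 matrix of the pattern, so W D is the linear map acting there.
    """
    return [[w * p for w, p in zip(row, pattern)] for row in W]


# Example values chosen only for illustration.
b = [0.5, -1.0, 0.0]
x = [1.0, -2.0, 0.3]

n_regions = len(all_regions(len(b)))
pattern = region_of(x, b)
W_eff = effective_weights([[1, 2], [3, 4]], (1, 0))

print(n_regions)  # 8
print(pattern)    # (1, 0, 1)
print(W_eff)      # [[1, 0], [3, 0]]
```

Within a single region the active set is fixed, so the nonlinearity reduces to a linear map there. The count grows as 2^N, which makes brute-force enumeration practical only for small N.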

Link to thesis: infoscience.epfl.ch/entities/pub...

If you know of any computational / theoretical work modelling neuromodulators please share it 🙏 if you don't, please retweet!

This nifty theory predicts how intrinsic timescales depend on network structure, neuron properties and input. A nontrivial task!

“Microscopic theory of intrinsic timescales in spiking neural networks”

tinyurl.com/43esfrrk

By @avm.bsky.social and @albada.bsky.social

But I’m struggling to see the leap from whole-brain connectomics to "building human-like AI systems." Help me here.

I’ve heard this idea a lot, but every time, I’m just not sure how it’s supposed to work.

#NeuroAI #neuroscience

Read more: e11.bio/news/roadmap

1990s: "reverse correlation"

2000s: "granger causality"

2010s: "functional connectivity"

2020s: "subspace communication"

The renamed:

Bluesky-sized history of neuroscience (biased by my interests)
