doomscrollingbabel.manoel.xyz/p/labeling-d...

Here’s one answer from researchers across NLP/MT, Translation Studies, and HCI.

"An Interdisciplinary Approach to Human-Centered Machine Translation"

arxiv.org/abs/2506.13468

I'll be recruiting PhD students this upcoming cycle for fall 2026. (And if you're a UMD grad student, sign up for my fall seminar!)

🧟 You get what we call a Frankentext!

💡 Frankentexts are surprisingly coherent and tough for AI detectors to flag.

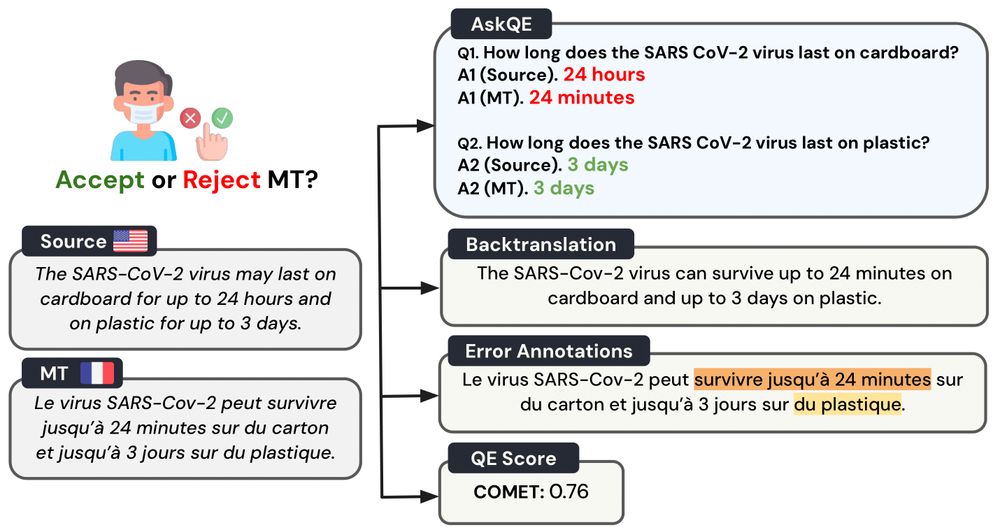

Introducing ❓AskQE❓, an #LLM-based Question Generation + Answering framework that detects critical MT errors and provides actionable feedback 🗣️

#ACL2025

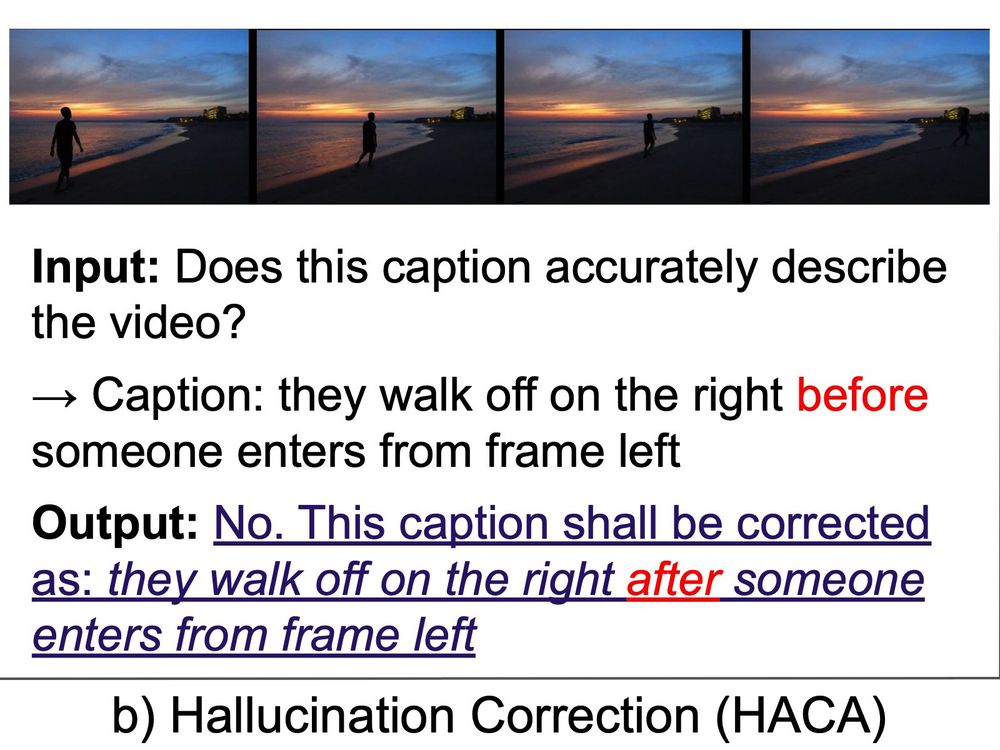

Excited to share our accepted paper at ACL: Can Hallucination Correction Improve Video-language Alignment?

Link: arxiv.org/abs/2502.15079

FATE is hiring a pre-doc research assistant! We're looking for candidates who will have completed their bachelor's degree (or equivalent) by summer 2025 and want to advance their research skills before applying to PhD programs.

www.microsoft.com/en-us/resear...

Before then, I'll postdoc for a year in the NLP group at another UW 🏔️ in the Pacific Northwest

Excited to share my paper that analyzes the effect of cross-lingual alignment on multilingual performance

Paper: arxiv.org/abs/2504.09378 🧵

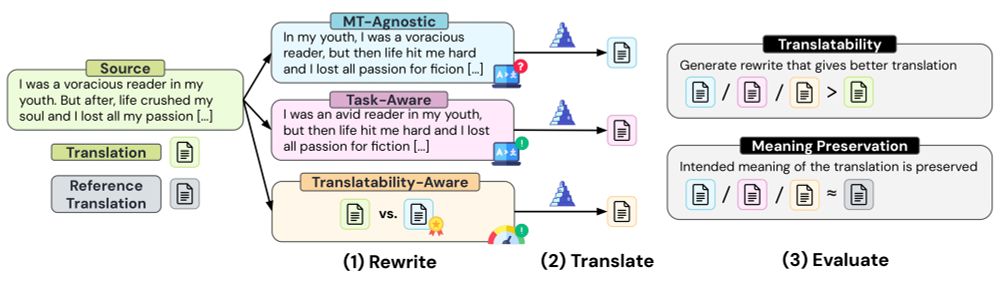

1/ We often assume that well-written text is easier to translate ✏️

But can #LLMs automatically rewrite inputs to improve machine translation? 🌍

Here’s what we found 🧵

Abstracts due March 22 AoE (+48hr)

Full papers due March 28 AoE (+24hr)

Plz RT 🙏

💰 $1 per question

🏆 Top-3 fastest + most accurate win $50

⏳ Questions take ~3 min => $20/hr+

Click here to sign up (please join, reposts appreciated 🙏): preferences.umiacs.umd.edu

We create ONERULER 💍, a multilingual long-context benchmark that allows for nonexistent needles. Turns out needle-in-a-haystack (NIAH) isn't so easy after all!

Our analysis across 26 languages 🧵👇
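To make "nonexistent needles" concrete, here is a minimal Python sketch of a needle-in-a-haystack item where the needle may be left out, so the only correct answer is "none". The function names (`build_niah_item`, `is_correct`), the "special magic number" wording, and the literal answer `none` are hypothetical illustrations, not ONERULER's actual prompts or scoring.

```python
import random


def build_niah_item(haystack, key, value, include_needle, seed=0):
    """Build one hypothetical NIAH item whose needle may be absent.

    Returns (prompt, gold_answer). When the needle is omitted, the gold
    answer is "none" (an assumed convention for this sketch).
    """
    rng = random.Random(seed)
    sentences = list(haystack)
    if include_needle:
        needle = f"The special magic number for '{key}' is {value}."
        # Insert the needle at a random position (0..len inclusive).
        sentences.insert(rng.randint(0, len(sentences)), needle)
        gold = str(value)
    else:
        # No needle: the model must say the answer is not in the text.
        gold = "none"
    context = " ".join(sentences)
    question = (
        f"What is the special magic number for '{key}'? "
        "If it does not appear in the text, answer 'none'."
    )
    return f"{context}\n\n{question}", gold


def is_correct(prediction, gold):
    # Case-insensitive exact match; a real benchmark may score differently.
    return prediction.strip().lower() == gold.lower()


haystack = ["The sky was grey.", "The trains ran late.", "Nobody minded."]
prompt, gold = build_niah_item(haystack, "apple", 42, include_needle=True)
prompt_absent, gold_absent = build_niah_item(haystack, "apple", 42, include_needle=False)
```

In the absent-needle case, a model that always returns some number can never be scored correct, which is what distinguishes these items from a standard NIAH test.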

Multiple choice evals for LLMs are simple and popular, but we know they are awful 😬

We complain they're full of errors, saturated, and test nothing meaningful, so why do we still use them? 🫠

Here's why MCQA evals are broken, and how to fix them 🧵

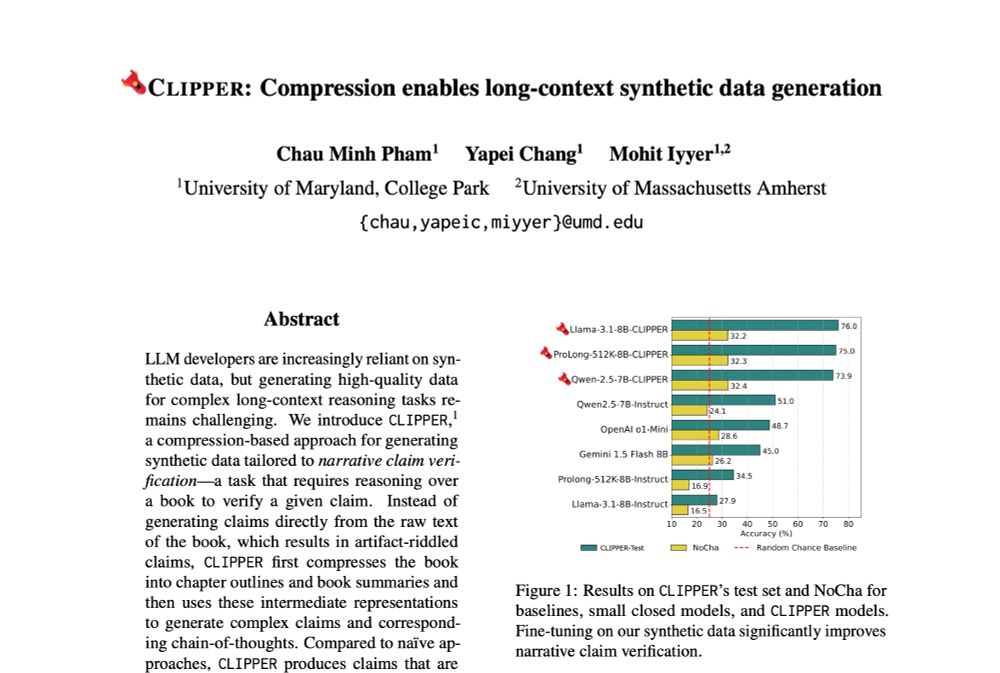

We present CLIPPER ✂️, a compression-based pipeline that produces grounded instructions for ~$0.50 each, 34x cheaper than human annotations.

Metaphors shape how people understand politics, but measuring them (& their real-world effects) is hard.

We develop a new method to measure metaphor & use it to study dehumanizing metaphor in 400K immigration tweets. Link: bit.ly/4i3PGm3

#NLP #NLProc #polisky #polcom #compsocialsci

🐦🐦

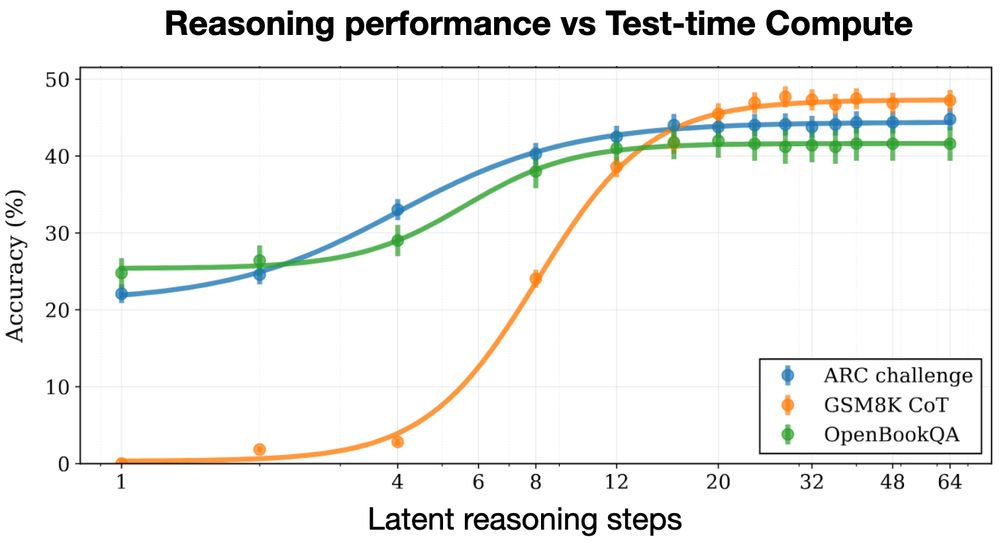

Huginn-3.5B reasons implicitly in latent space 🧠

Unlike o1 and R1, latent reasoning doesn’t need special chain-of-thought training data, and doesn’t produce extra CoT tokens at test time.

We trained on 800B tokens 👇

CECE enables interpretability and, without fine-tuning, achieves significant improvements on hard compositional benchmarks (e.g., Winoground, EqBen) and alignment benchmarks (e.g., DrawBench, EditBench). More info: cece-vlm.github.io

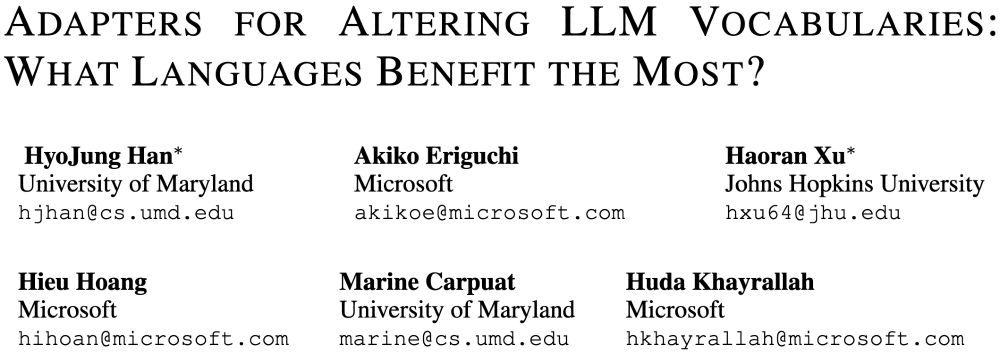

🧐Which languages benefit the most from vocabulary adaptation?

We introduce VocADT, a new vocabulary adaptation method with a vocabulary adapter.

We explore the impact of various adaptation strategies on languages with diverse scripts and varying degrees of fragmentation to answer this question.

forms.gle/rZp67YvMn1hn...

Are you bursting with anticipation to see what they are? Check out this blog post, and read down-thread!! 🎉🧵👇 1/n

medium.com/@TmlrOrg/ann...
