🧟 You get what we call a Frankentext!

💡 Frankentexts are surprisingly coherent and tough for AI detectors to flag.

How the current way of training language models destroys any voice (and hope of good writing).

www.interconnects.ai/p/why-ai-wri...

We'll start with this piece on the Google Books project: the hopes, dreams, disasters, and aftermath of building a public library on the internet.

1/n

We explore a fundamental question: How can we *automatically* learn which data is most valuable for training foundation models?

Paper: arxiv.org/pdf/2505.17895 to appear at @neuripsconf.bsky.social

Thread 👇

So I’m thrilled to share this new home for DH proceedings, which will include CHR papers & more.

Thanks to @taylor-arnold.bsky.social for leading this effort!

bit.ly/ach-anthology

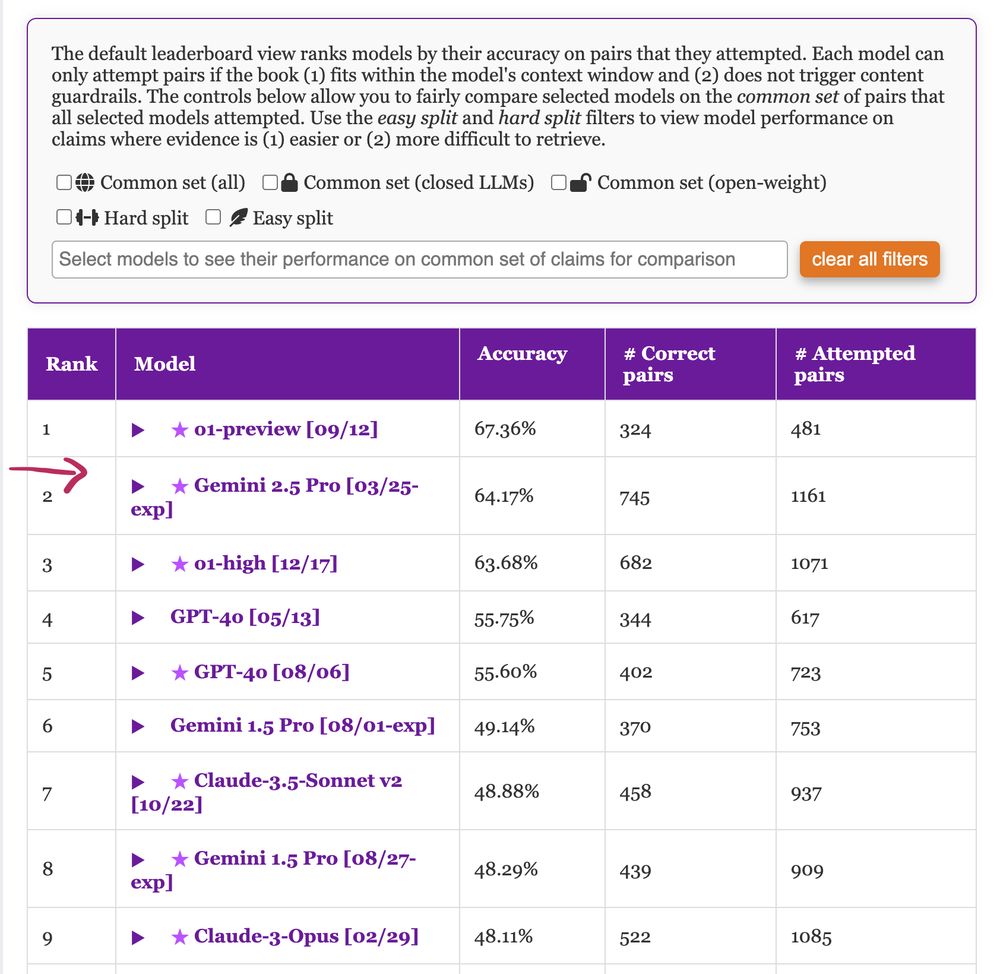

There are a few ways to accomplish this task. Which work best? Our new EMNLP paper has some answers🧵

arxiv.org/pdf/2507.00828

We examine 186k articles published this summer and find that ~9% are either fully or partially AI-generated, usually without readers having any idea.

Here's what we learned about how AI is influencing local and national journalism:

In our new work (w/ @tuhinchakr.bsky.social, Diego Garcia-Olano, @byron.bsky.social ) we provide a systematic attempt at measuring AI "slop" in text!

arxiv.org/abs/2509.19163

🧵 (1/7)

"Are language models worth it?"

Explains that the prior decade of his work on adversarial images, while it taught us a lot, isn't very applied; it's unlikely anyone is actually altering images of cats in scary ways.

Standard benchmarks give every LLM the same questions. This is like testing 5th graders and college seniors with *one* exam! 🥴

Meet Fluid Benchmarking, a capability-adaptive eval method delivering lower variance, higher validity, and reduced cost.

🧵

Looking for practical methods for settings where human annotations are costly.

A few examples in thread ↴

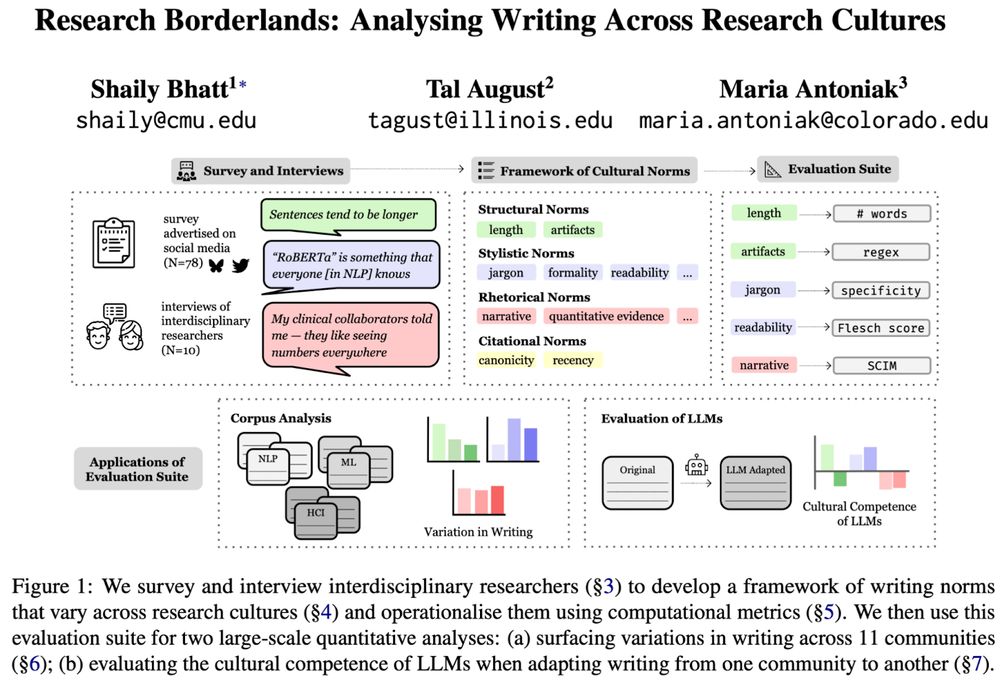

🚩 Tired of “cultural” evals that don't consult people?

We engaged with interdisciplinary researchers to identify & measure ✨cultural norms✨in scientific writing, and show that❗LLMs flatten them❗

📜 arxiv.org/abs/2506.00784

[1/11]

Our 🦉OWL dataset (31K/10 languages) shows GPT-4o recognizes books:

92% English

83% official translations

69% unseen translations

75% as audio (EN)

Great work led by undergrads at UMass NLP 🥳

🎯 We demonstrate that ranking-based discriminator training can significantly reduce this gap, and improvements on one task often generalize to others!

🧵👇

🚨 We've put LLMs to the test as writing co-pilots – how good are they really at helping us write? LLMs are increasingly used for open-ended tasks like writing assistance, but how do we assess their effectiveness? 🤔

arxiv.org/pdf/2503.19711

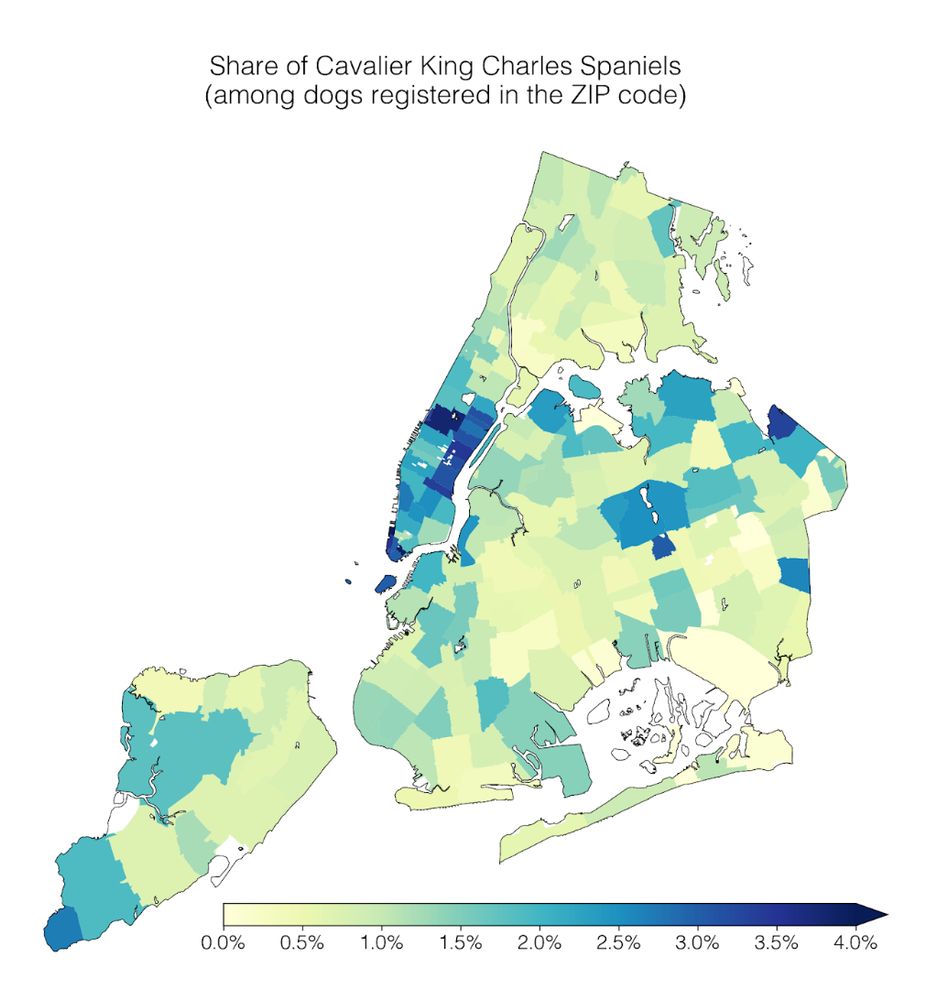

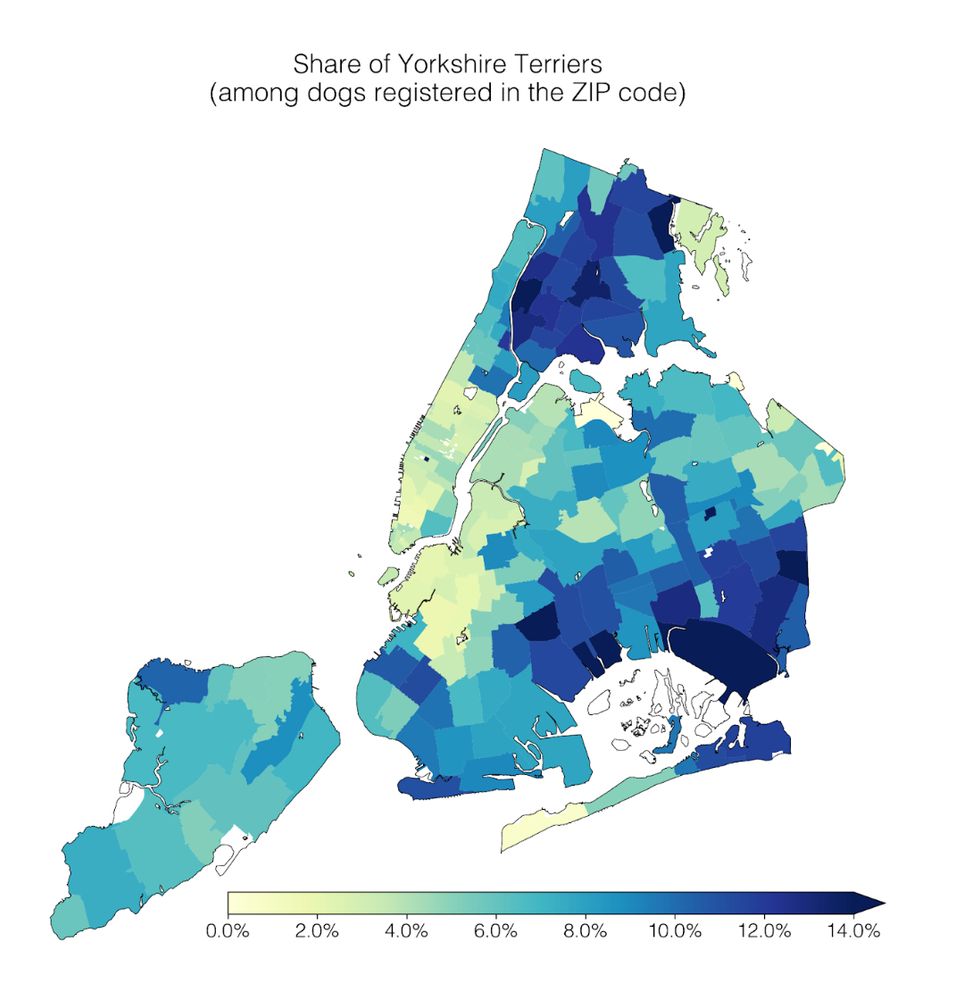

1) Geospatial trends: Cavalier King Charles Spaniels are common in Manhattan; the opposite is true for Yorkshire Terriers.

Which, clearly only a crazy weirdo would do.

dmarx.github.io/papers-feed/

Our method, HypotheSAEs, produces interpretable text features that predict a target variable, e.g. features in news headlines that predict engagement. 🧵1/

✅ Humans achieve 85% accuracy

❌ OpenAI Operator: 24%

❌ Anthropic Computer Use: 14%

❌ Convergence AI Proxy: 13%

We create ONERULER 💍, a multilingual long-context benchmark that allows for nonexistent needles. Turns out NIAH isn't so easy after all!

Our analysis across 26 languages 🧵👇

We're open to feedback—read & share thoughts!

@laurenfklein.bsky.social @mmvty.bsky.social @docdre.distributedblackness.net @mariaa.bsky.social @jmjafrx.bsky.social @nolauren.bsky.social @dmimno.bsky.social
