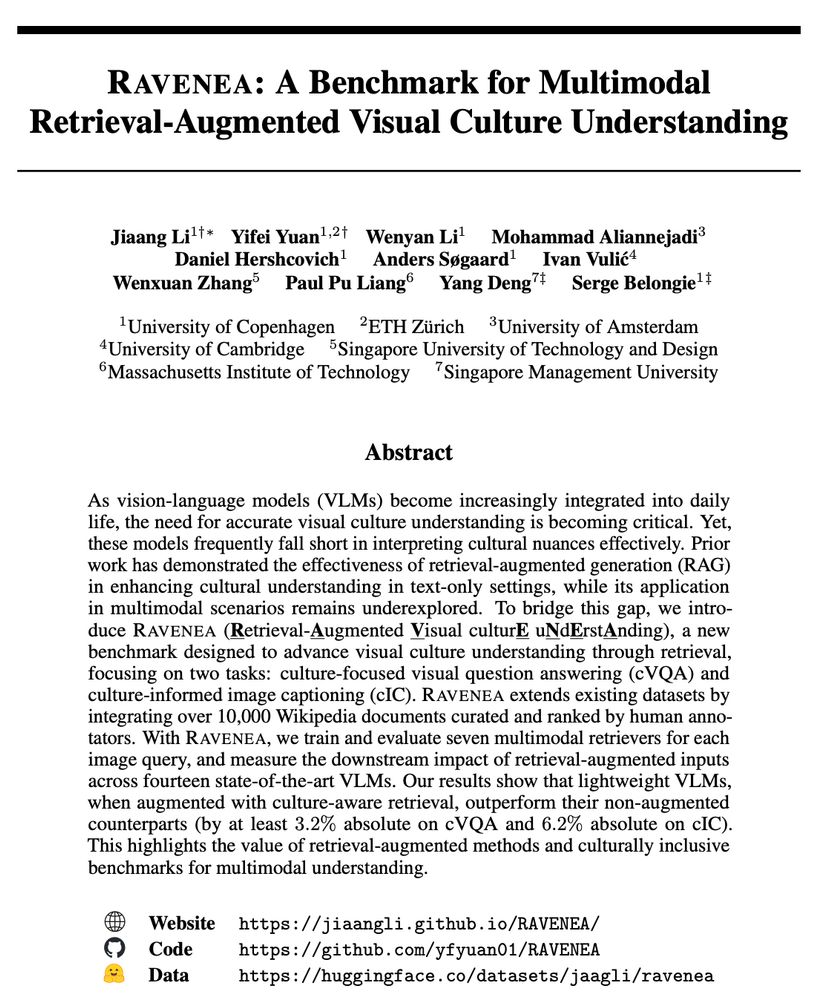

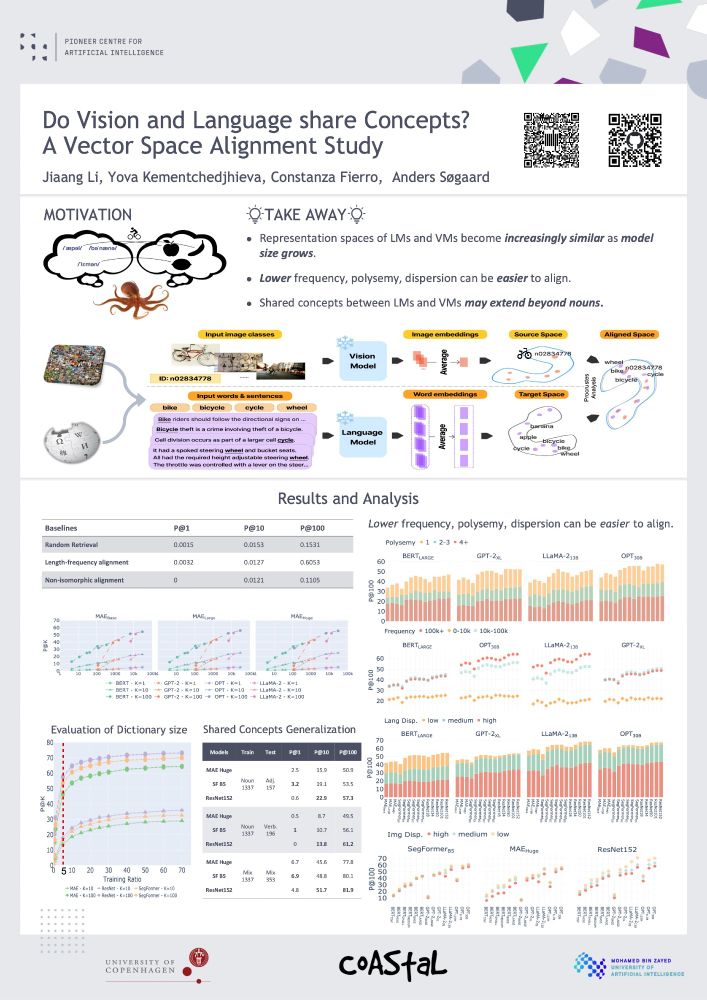

Jiaang Li

@jiaangli.bsky.social

1.1K followers

85 following

17 posts

PhD student at University of Copenhagen @belongielab.org | #nlp #computervision | ELLIS student @ellis.eu

🌐 https://jiaangli.github.io/

Posts

Media

Videos

Starter Packs

Reposted by Jiaang Li

Reposted by Jiaang Li

Reposted by Jiaang Li

Reposted by Jiaang Li

Jiaang Li

@jiaangli.bsky.social

· May 23

Reposted by Jiaang Li

Yifei Yuan

@yfyuan01.bsky.social

· Apr 21

Revisiting the Othello World Model Hypothesis

Li et al. (2023) used the Othello board game as a test case for the ability of GPT-2 to induce world models, and were followed up by Nanda et al. (2023b). We briefly discuss the original experiments, ...

arxiv.org

Reposted by Jiaang Li

Reposted by Jiaang Li

Reposted by Jiaang Li

Nico Lang

@nicolang.bsky.social

· Jan 9

Reposted by Jiaang Li

Reposted by Jiaang Li

Belongie Lab

@belongielab.org

· Dec 3

Reposted by Jiaang Li

Jiaang Li

@jiaangli.bsky.social

· Nov 24