Winston Lin

@linstonwin.bsky.social

1.9K followers

1.6K following

22 posts

senior lecturer in statistics, penn

NYC & Philadelphia

https://www.stat.berkeley.edu/~winston

Pinned

Winston Lin

@linstonwin.bsky.social

· Mar 8

Winston Lin

@linstonwin.bsky.social

· Sep 1

How to Write an Effective Referee Report and Improve the Scientific Review Process

(Winter 2017) - The review process for academic journals in economics has grown vastly more extensive over time. Journals demand more revisions, and papers have become bloated with numerous robustness...

www.aeaweb.org

Reposted by Winston Lin

Noah Greifer

@noahgreifer.bsky.social

· Jul 9

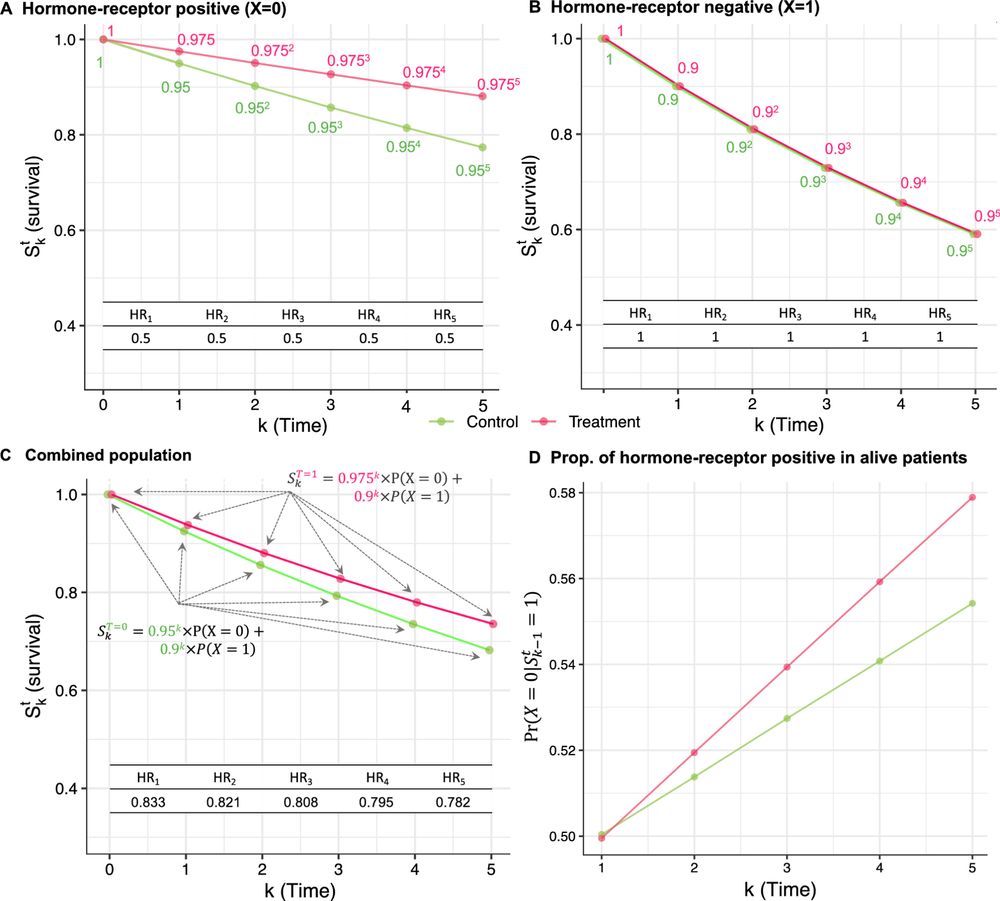

How hazard ratios can mislead and why it matters in practice - European Journal of Epidemiology

Hazard ratios are routinely reported as effect measures in clinical trials and observational studies. However, many methodological works have raised concerns about the interpretation of hazard ratios ...

doi.org

Reposted by Winston Lin

Noah Greifer

@noahgreifer.bsky.social

· Jun 4

Winston Lin

@linstonwin.bsky.social

· May 5

Reposted by Winston Lin

Brennan Kahan

@brennankahan.bsky.social

· Apr 15

CONSORT 2025 explanation and elaboration: updated guideline for reporting randomised trials

Critical appraisal of the quality of randomised trials is possible only if their design, conduct, analysis, and results are completely and accurately reported. Without transparent reporting of the met...

www.bmj.com

Reposted by Winston Lin

Winston Lin

@linstonwin.bsky.social

· Apr 9

Why Econometrics is Confusing Part 1: The Error Term | econometrics.blog

“Suppose that \(Y = \alpha + \beta X + U\).” A sentence like this is bound to come up dozens of times in an introductory econometrics course, but if I had my way it would be stamped out completely.

www.econometrics.blog

Reposted by Winston Lin

Winston Lin

@linstonwin.bsky.social

· Apr 6

ROBUST STANDARD ERRORS IN SMALL SAMPLES: SOME PRACTICAL ADVICE

Guido W. Imbens, Michal Kolesár, ROBUST STANDARD ERRORS IN SMALL SAMPLES: SOME PRACTICAL ADVICE, The Review of Economics and Statistics, Vol. 98, No. 4 (October 2016), pp. 701-712

www.jstor.org

Reposted by Winston Lin

Winston Lin

@linstonwin.bsky.social

· Mar 9

The impact of a Hausman pretest on the size of a hypothesis test: The panel data case

The size properties of a two-stage test in a panel data model are investigated where in the first ...

www.researchgate.net

Winston Lin

@linstonwin.bsky.social

· Mar 9

Pretest with Caution: Event-Study Estimates after Testing for Parallel Trends

(September 2022) - This paper discusses two important limitations of the common practice of testing for preexisting differences in trends ("pre-trends") when using difference-in-differences and relate...

www.aeaweb.org

Winston Lin

@linstonwin.bsky.social

· Mar 8

Robustness of ordinary least squares in randomized clinical trials

There has been a series of occasional papers in this journal about semiparametric methods for robust covariate control in the analysis of clinical trials. These methods are fairly easy to apply on cu....

doi.org

Winston Lin

@linstonwin.bsky.social

· Mar 8

Reposted by Winston Lin

Winston Lin

@linstonwin.bsky.social

· Feb 10

Winston Lin

@linstonwin.bsky.social

· Feb 10

Winston Lin

@linstonwin.bsky.social

· Feb 10