Charlie Williams

@pontifc8.bsky.social

390 followers

650 following

82 posts

Professor at Bocconi University in Milan. Amiable dabbler posting about business research, business education, strategy execution, human impact of business, and maybe a few other things.


Reposted by Charlie Williams

Thor Benson

@thorbenson.bsky.social

· Aug 15

Reposted by Charlie Williams

Tombmas Spooks

@thomasfuchs.at

· Jun 20

Reposted by Charlie Williams

Charlie Williams

@pontifc8.bsky.social

· May 17

Reposted by Charlie Williams

Charlie Williams

@pontifc8.bsky.social

· Apr 24

Charlie Williams

@pontifc8.bsky.social

· Apr 14

Essay | The Last Decision by the World’s Leading Thinker on Decisions

Shortly before Daniel Kahneman died last March, he emailed friends a message: He was choosing to end his own life in Switzerland. Some are still struggling with his choice.

www.wsj.com

Reposted by Charlie Williams

Charlie Williams

@pontifc8.bsky.social

· Dec 21

Charlie Williams

@pontifc8.bsky.social

· Dec 20

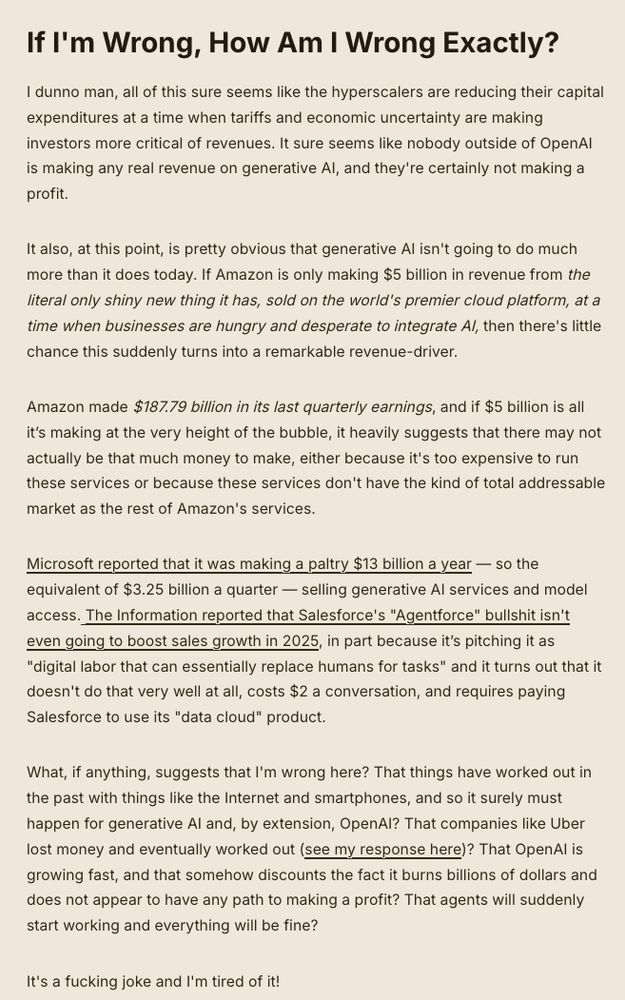

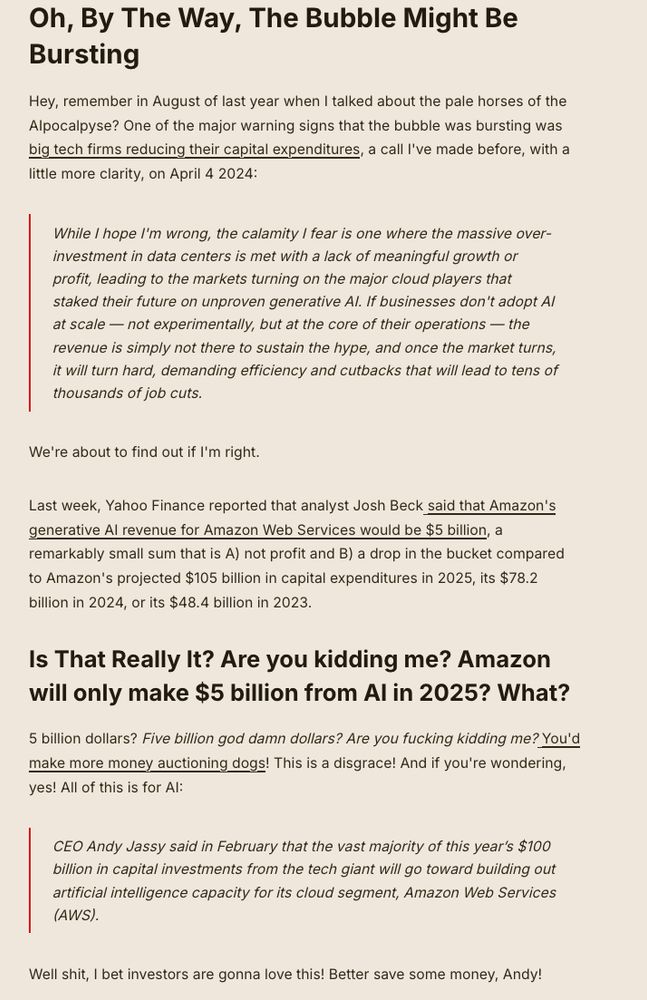

![Oh, shit! A report from Wells Fargo analysts (called "Data Centers: AWS Goes on Pause") says that Amazon has "paused a portion of its leasing discussions on the colocation side...[and while] it's not clear the magnitude of the pause...the positioning is similar to what [analysts have] heard recently from Microsoft, [that] they are digesting aggressive recent lease-up deals...pulling back from a pipeline of LOIs or SOQs."

Some asshole is going to say "LOIs and SOQs aren't a big deal," but they are. I wrote about it here.

"Digesting" in this case refers to when hyperscalers sit with their current capacity for a minute, and Wells Fargo adds that these periods typically last 6-12 months, though can be much shorter. It's not obvious how much capacity Amazon is walking away from, but they are walking away from capacity. It's happening.

But what if it wasn't just Amazon? Another report from friend of the newsletter (read: people I email occasionally asking for a PDF) analyst TD Cowen put out a report last week that, while titled in a way that suggested there wasn't a pull back, actually said there was.

Let's take a look at one damning quote:

...relative to the hyperscale demand backdrop at PTC, hyperscale demand has moderated a bit (driven by the Microsoft pullback and to a lesser extent Amazon, discussed below), particularly in Europe, 2) there has been a broader moderation in the urgency and speed with which the hyperscalers are looking to take down capacity, and 3) the number of large deals (i.e. +400MW deals) in the market appears to have moderated.

In plain English, this means "demand has come down, there's less urgency in building this stuff, and the market is slowing down." Cowen also added that it "...observed a moderation in the exuberance around the outlook for hyperscale demand which characterized the market this time last year."

Brother, isn't this meant to be the next big thing? We need more exuberance! Not less!](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:qc6xzgctorfsm35w6i3vdebx/bafkreig6slvxsazwqancvj7fkcj4qcg46n3t256dlhyohzhvp7mrtirtoa@jpeg)

!["It's the same thing happening on both sides, and I've been amazed at how much this is coordinating our reality," said the writer Thomas Chatterton Williams, who was for a time a member of a group chat with Andreessen. "If you weren't in the business at all, you'd think everyone was arriving at conclusions independently - and [they're] not. It's a small group of people who talk to each other and overlap between politics and journalism and a few industries."](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:u2dwaqvvtmmz6v3hmszjrpk5/bafkreif6nmdmp5icww4rugbj4ztlxsuoi7zmppzgguanwna3kemk65k634@jpeg)