Pooja Kathail

@poojakathail.bsky.social

87 followers

200 following

4 posts

Computational Biology PhD student @ucberkeley

Pinned

Pooja Kathail

@poojakathail.bsky.social

· Nov 20

Leveraging genomic deep learning models for non-coding variant effect prediction

The majority of genetic variants identified in genome-wide association studies of complex traits are non-coding, and characterizing their function remains an important challenge in human genetics. Gen...

arxiv.org

Reposted by Pooja Kathail

Liana Lareau

@lianafaye.bsky.social

· Aug 7

Protein language models reveal evolutionary constraints on synonymous codon choice

Evolution has shaped the genetic code, with subtle pressures leading to preferences for some synonymous codons over others. Codons are translated at different speeds by the ribosome, imposing constrai...

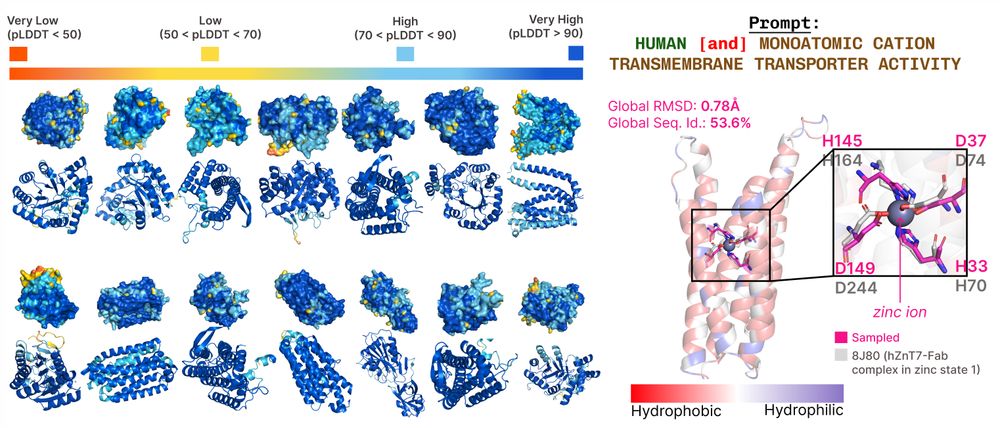

www.biorxiv.org

Reposted by Pooja Kathail

Sara Mostafavi

@saramostafavi.bsky.social

· Apr 16

Deep genomic models of allele-specific measurements

Allele-specific quantification of sequencing data, such as gene expression, allows for a causal investigation of how DNA sequence variations influence cis gene regulation. Current methods for analyzin...

www.biorxiv.org

Reposted by Pooja Kathail

Sara Mostafavi

@saramostafavi.bsky.social

· Mar 15

Investigating Data Size, Sequence Diversity, and Model Complexity in MPRA-based Sequence-to-Function Prediction

We created the MPRA Dataset Collection (MDC), a curated resource of MPRA data from 12 studies comprising over 150 million labeled DNA subsequences. These datasets include both random and natural genom...

www.biorxiv.org

Reposted by Pooja Kathail

Jeremy Berg

@jeremymberg.bsky.social

· Mar 11

Reposted by Pooja Kathail

Sara Mostafavi

@saramostafavi.bsky.social

· Feb 23

A scalable approach to investigating sequence-to-expression prediction from personal genomes

A key promise of sequence-to-function (S2F) models is their ability to evaluate arbitrary sequence inputs, providing a robust framework for understanding genotype-phenotype relationships. However, despite strong performance across genomic loci, S2F models struggle with inter-individual variation. Training a model to make genotype-dependent predictions at a single locus (an approach we call personal genome training) offers a potential solution. We introduce SAGE-net, a scalable framework and software package for training and evaluating S2F models using personal genomes. Leveraging its scalability, we conduct extensive experiments on model and training hyperparameters, demonstrating that training on personal genomes improves predictions for held-out individuals. However, the model achieves this by identifying predictive variants rather than learning a cis-regulatory grammar that generalizes across loci. This failure to generalize persists across a range of hyperparameter settings. These findings highlight the need for further exploration to unlock the full potential of S2F models in decoding the regulatory grammar of personal genomes. Scalable software and infrastructure development will be critical to this progress.

www.biorxiv.org

Reposted by Pooja Kathail

Peter Koo

@pkoo562.bsky.social

· Feb 5

Reposted by Pooja Kathail

Austin Wang

@austintwang.bsky.social

· Dec 11

DART-Eval: A Comprehensive DNA Language Model Evaluation Benchmark on Regulatory DNA

Recent advances in self-supervised models for natural language, vision, and protein sequences have inspired the development of large genomic DNA language models (DNALMs). These models aim to learn gen...

arxiv.org

Reposted by Pooja Kathail

Pooja Kathail

@poojakathail.bsky.social

· Nov 20
