Saurabh

@saurabhr.bsky.social

55 followers

220 following

8 posts

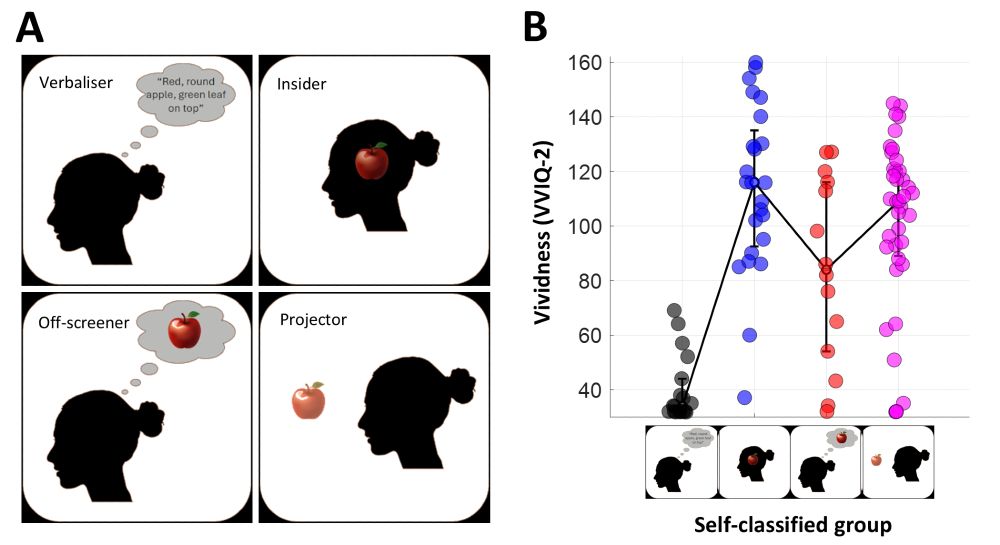

Ph.D. in Psychology | Currently on the Job Market | Pursuing Consciousness, Reality Monitoring, World Models, and Imagination with my life force. saurabhr.github.io

Reposted by Saurabh

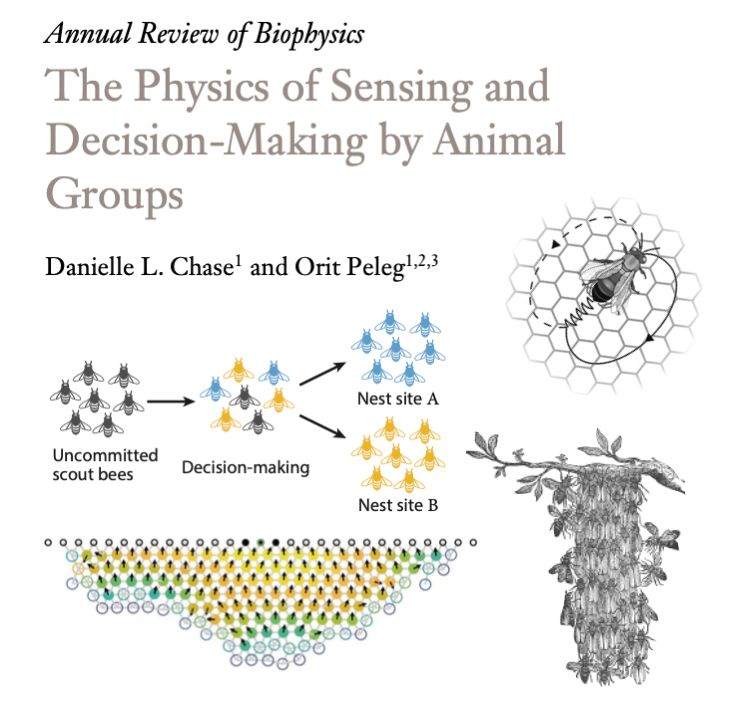

PsyArXivBot

@psyarxivbot.bsky.social

· Aug 18
