It uses a hybrid autoregressive plus diffusion architecture, combining strong global semantic understanding with high-fidelity visual detail. It matches mainstream diffusion models in overall quality while excelling at text rendering and knowledge-intensive generation.

- avatar colors that show whether we're mutuals

- exportable bookmarks with custom folders

- feed of trending papers and articles

- safety alerts when a post goes viral

- researcher profiles with topics, affiliations, featured papers

We found that if you simply delete positional embeddings after pretraining and recalibrate for <1% of the original budget, you unlock massive context windows. Smarter, not harder.

We found embeddings like RoPE aid training but bottleneck long-sequence generalization. Our solution’s simple: treat them as a temporary training scaffold, not a permanent necessity.

arxiv.org/abs/2512.12167

pub.sakana.ai/DroPE

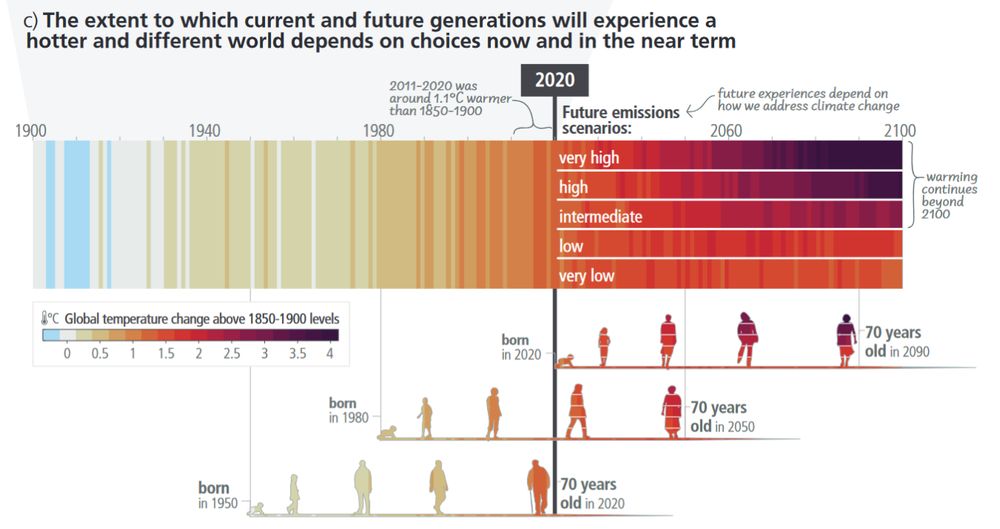

How? I'm adding new videos, deep dives & behind-the-scenes stories to Talking Climate.

Why? Because climate honesty and hope matter more than ever right now.

Join me on Patreon: bit.ly/47pCLaf

Or Substack: bit.ly/4ntfvhJ

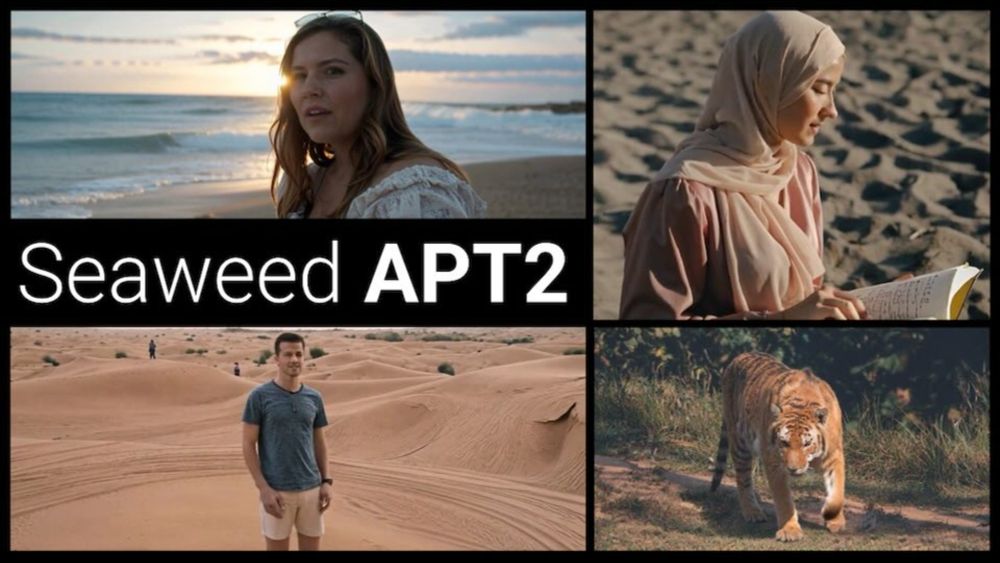

Meet Seaweed-APT2: an 8B-param autoregressive GAN that streams 24fps video at 736×416—all in *1 network forward eval*. That’s 1440 frames/min, live, on a single H100 🚀

Project: seaweed-apt.com/2

Meanwhile the FTC: www.ftc.gov/news-events/...

People are always a bit surprised by that.

I have equipped it with a @sipmask.bsky.social port so I can drink.

Enter IntrinsicEdit: Precise generative image manipulation in intrinsic space (SIGGRAPH 2025)!

intrinsic-edit.github.io

Discover MUSt3R & Pow3R, universal encoder DUNE + research in navigation, vizloc, segmentation & human motion understanding!

All our #CVPR2025 papers are here

➡️ tinyurl.com/4z79ujce

Conference papers:

PromptHMR: Promptable Human Mesh Recovery

yufu-wang.github.io/phmr-page/

DiffLocks: Generating 3D Hair from a Single Image using Diffusion Models

radualexandru.github.io/difflocks/

The paper is rather critical of reasoning LLMs (LRMs):

x.com/MFarajtabar...
