https://katesanders9.github.io/

GitHub: github.com/kr-ramesh/sy...

Paper 📝: aclanthology.org/2025.emnlp-d...

#EMNLP2025 #EMNLP #SyntheticData

Read more about the award here: aaai.org/about-aaai/a...

@yoavartzi.com

2004: A year

2010: ~ a month

2015: ~ a week

Now: A day

ourworldindata.org/data-insight... 🧪

The mapper is now completely redesigned by me and @spudwaffle.bsky.social, allowing for much prettier looking maps and way more customization alongside significantly more options for geographies!

See below for some of the examples of the maps you can create!

Now on arXiv: arxiv.org/abs/2508.16599

🔗 Project page: aka.ms/jailbreak-d...

📊 Dataset: huggingface.co/datasets/ja...

x.com/jackjingyuz...

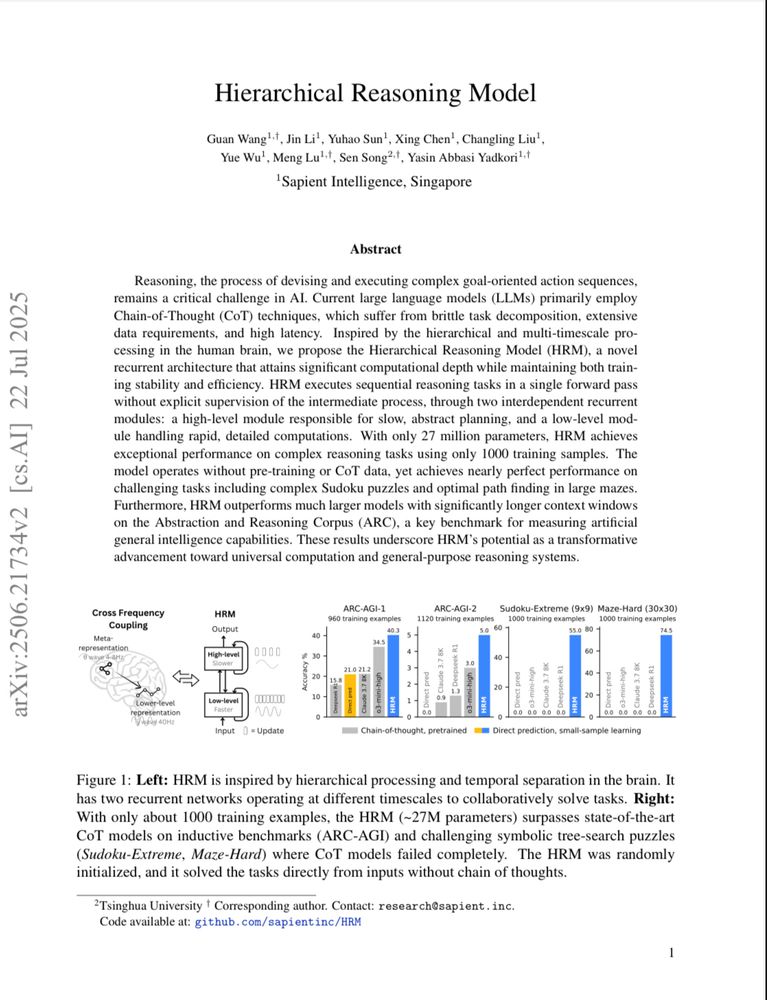

A tiny (27M) brain-inspired model trained just on 1000 samples outperforming o3-mini-high on reasoning tasks.

#MLSky 🧠🤖

Writing a paper on these topics? Submit to the ScaleOPT workshop at NeurIPS!

www.cvxgrp.org/scaleopt/#su...

Reach out if you know folks interested in legal NLP, structured prediction, and working full-time in a startup environment in NYC

I'll also always chat about:

• population-level inference on corpora

• broad-coverage semantics

• which café has the best Sachertorte in Vienna

Please reach out if you want to grab coffee!

Pin it to your home 📌 and enjoy!

bsky.app/profile/did:...

Thank you all for all your support, and make sure to keep spreading the word!

We’re thrilled to pre-celebrate the incredible research 📚 ✨ that will be presented starting Monday next week in Vienna 🇦🇹 !

Start exploring 👉 aclanthology.org/events/acl-2...

#NLProc #ACL2025NLP #ACLAnthology

I had no idea solar was booming like this. And if you live in the same world as me, dominated by oil & gas guys maintaining that solar and wind are inefficient gimmicks, you might not've known some of this either.

Hover to see details, zoom to explore more fine-grained topics, click to go to a page. Search by page name to find interesting starting points for exploration.

lmcinnes.github.io/datamapplot_...
