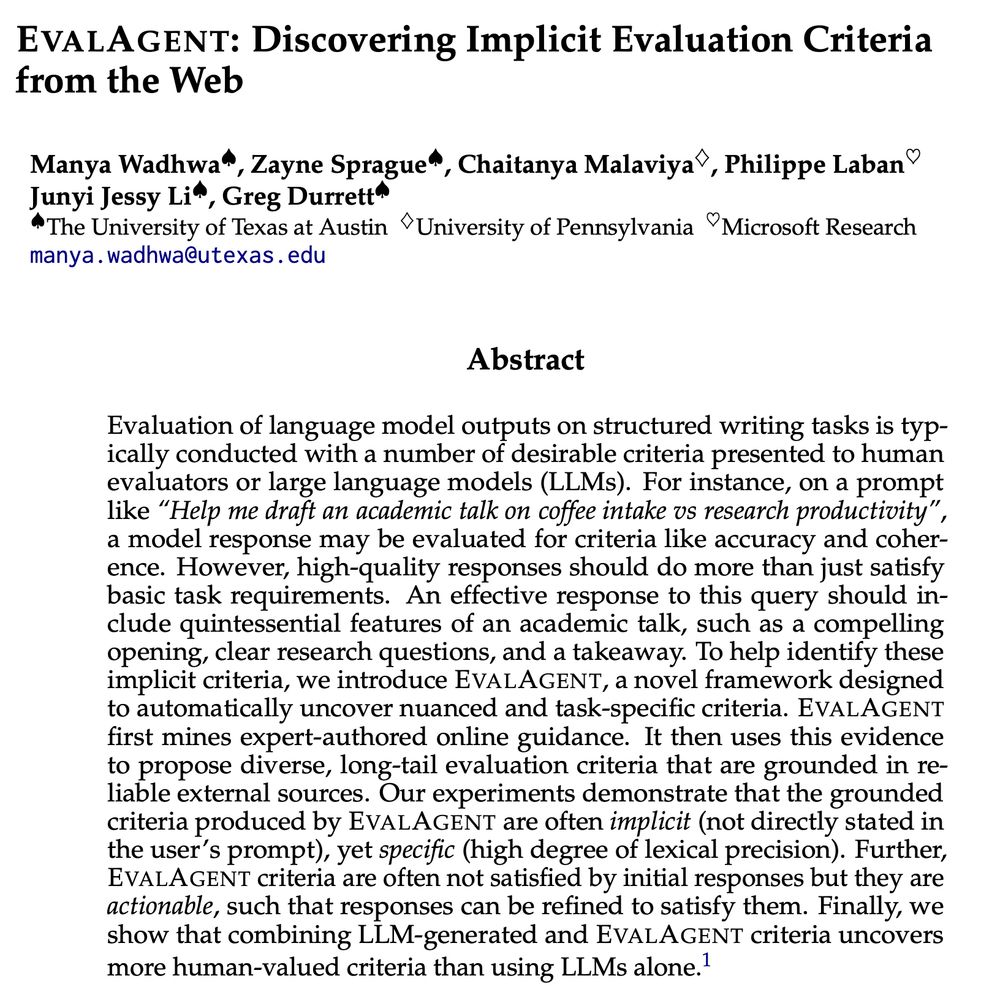

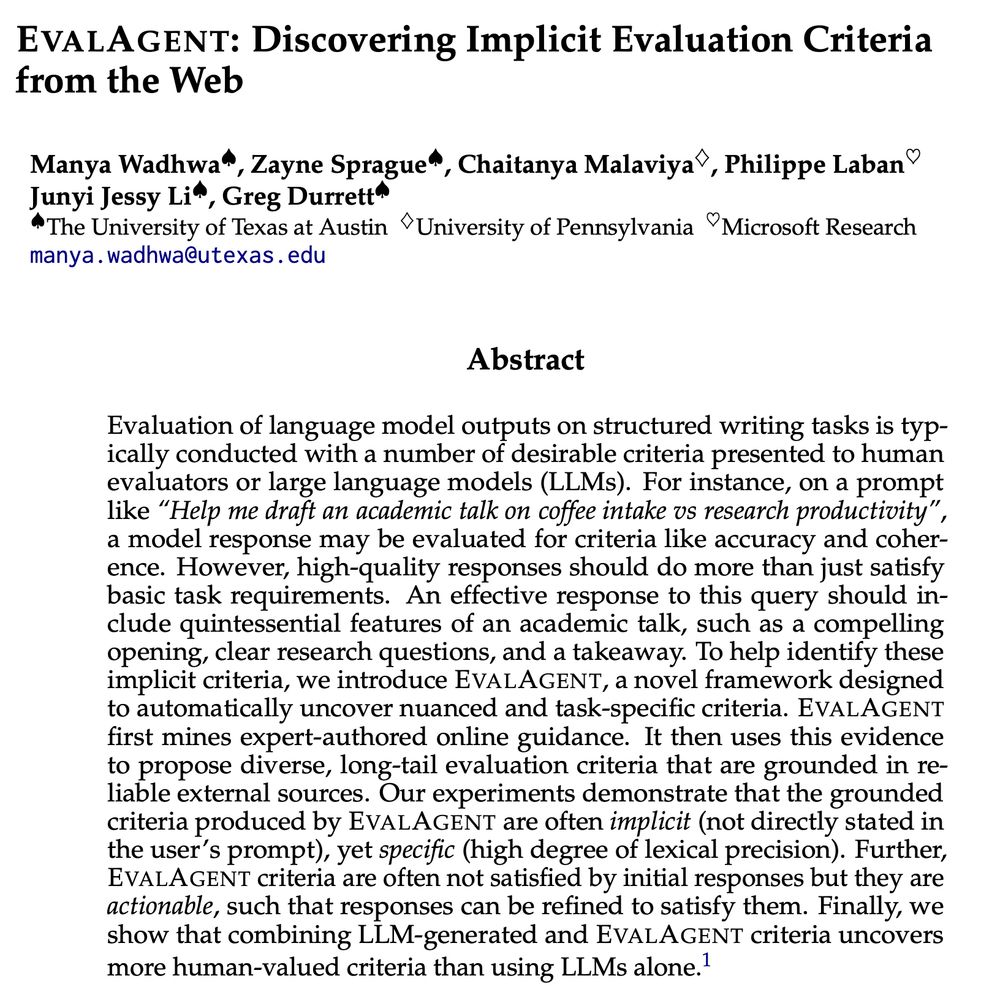

We introduce EvalAgent, a framework that identifies nuanced and diverse criteria 📋✍️.

EvalAgent identifies 👩🏫🎓 expert advice on the web that implicitly addresses the user’s prompt 🧵👇

We examine 186k articles published this summer and find that ~9% are either fully or partially AI-generated, usually without readers having any idea.

Here's what we learned about how AI is influencing local and national journalism:

Authors have sued LLM companies for using books w/o permission for model training.

Courts, however, need empirical evidence of market harm. Our preregistered study addresses exactly this gap.

Joint work w/ Jane Ginsburg from Columbia Law and @dhillonp.bsky.social 1/n🧵

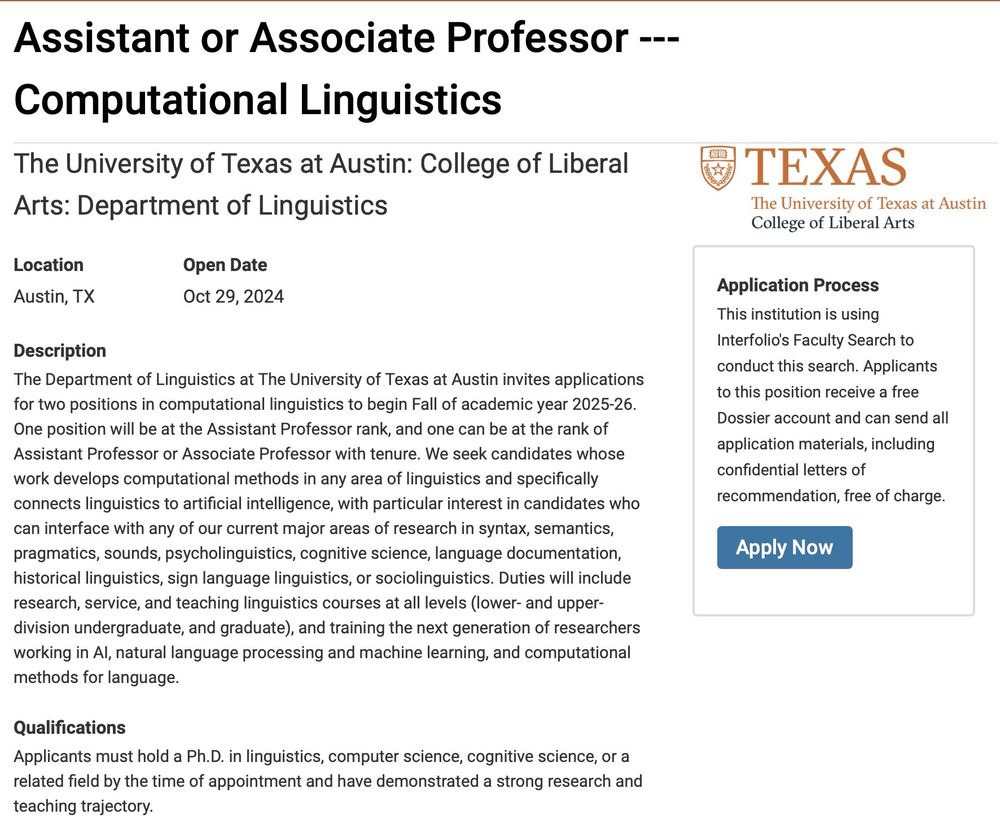

Asst or Assoc.

We have a thriving group (sites.utexas.edu/compling/) and a long, proud history in the space. (Fun fact: Jeff Elman was a UT Austin Linguistics Ph.D.)

faculty.utexas.edu/career/170793

🤘

Tuesday morning: @juand-r.bsky.social and @ramyanamuduri.bsky.social 's papers (find them if you missed it!)

Wednesday pm: @manyawadhwa.bsky.social 's EvalAgent

Thursday am: @anirudhkhatry.bsky.social 's CRUST-Bench oral spotlight + poster

If you are interested in evals of open-ended tasks/creativity, please reach out and we can schedule a chat! :)

🎯 We demonstrate that ranking-based discriminator training can significantly reduce this gap, and improvements on one task often generalize to others!

🧵👇

📍4:30–6:30 PM / Room 710 – Poster #8

Our new paper investigates these and other idiosyncratic biases in preference models, and presents a simple post-training recipe to mitigate them! Thread below 🧵↓

@utaustin.bsky.social Computer Science in August 2025 as an Assistant Professor! 🎉

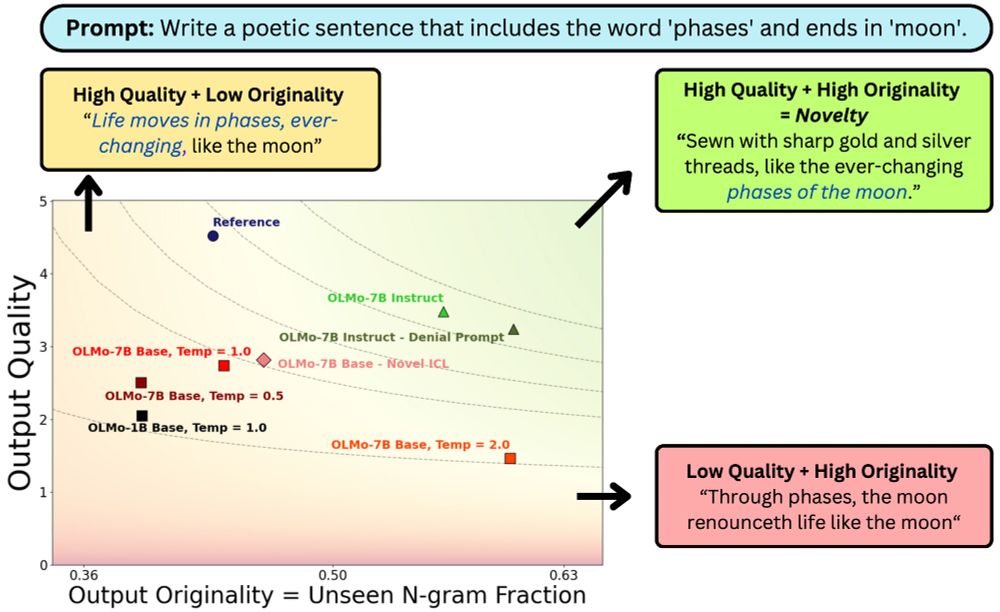

In work w/ johnchen6.bsky.social, Jane Pan, Valerie Chen and He He, we argue it needs to be both original and high quality. While prompting tricks trade one for the other, better models (scaling/post-training) can shift the novelty frontier 🧵

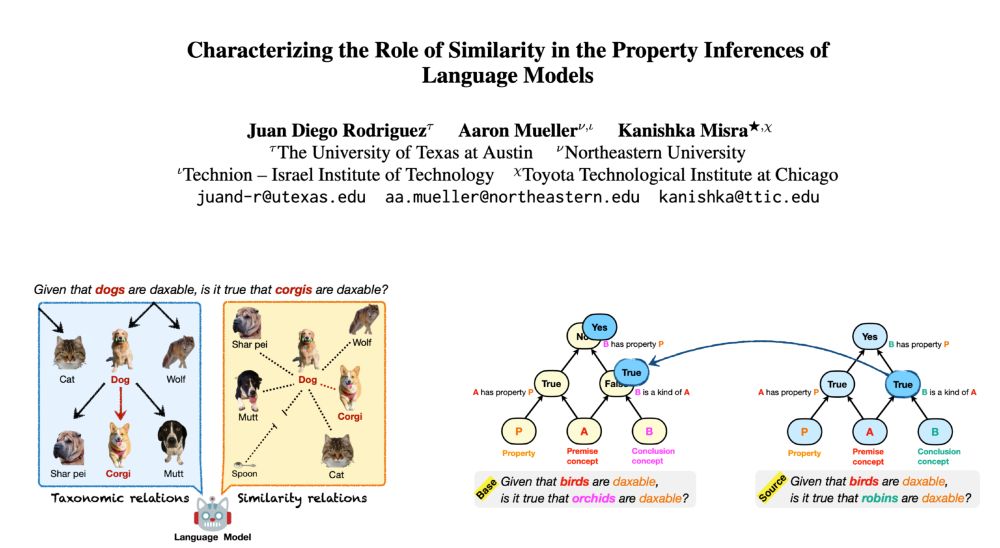

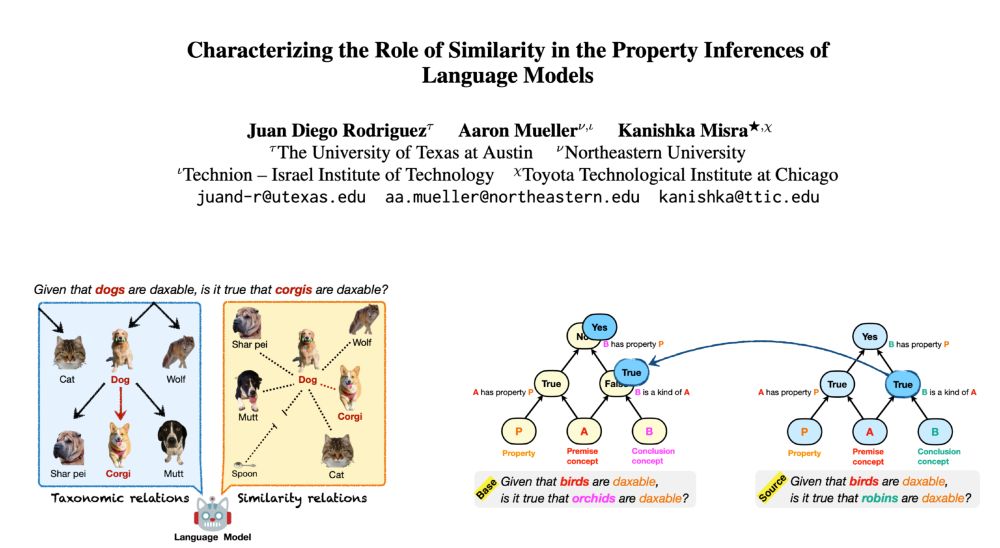

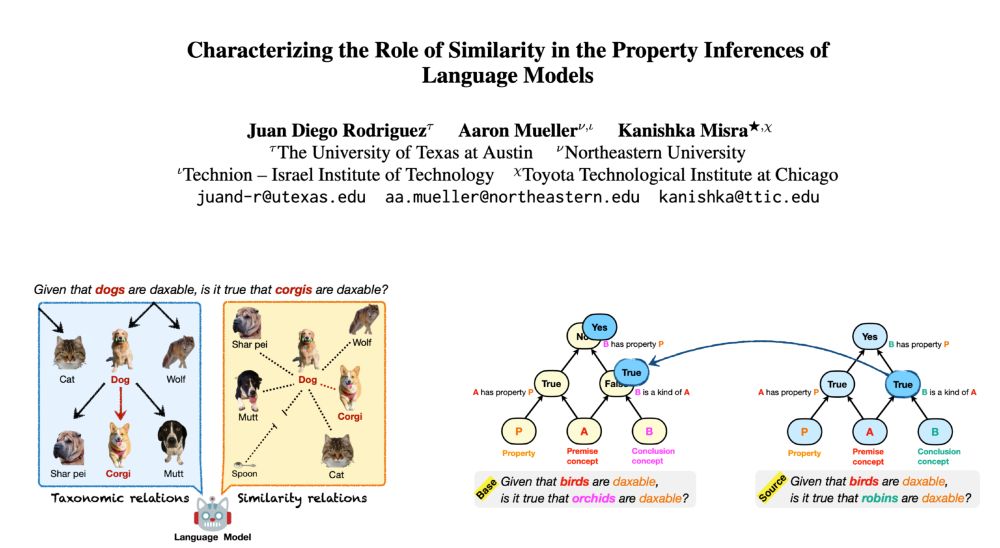

🌟 New paper with my fantastic collaborators @amuuueller.bsky.social and @kanishka.bsky.social

Or if you're at home like me, read our paper: arxiv.org/abs/2410.22590

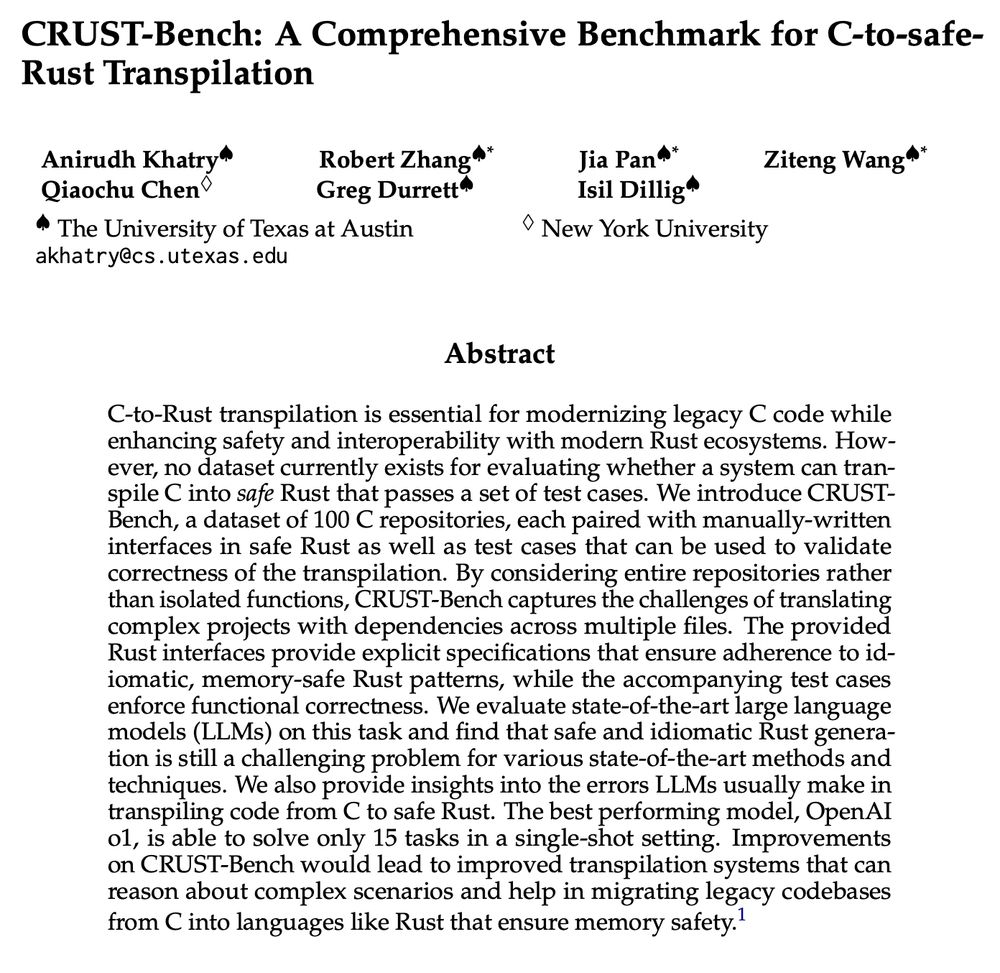

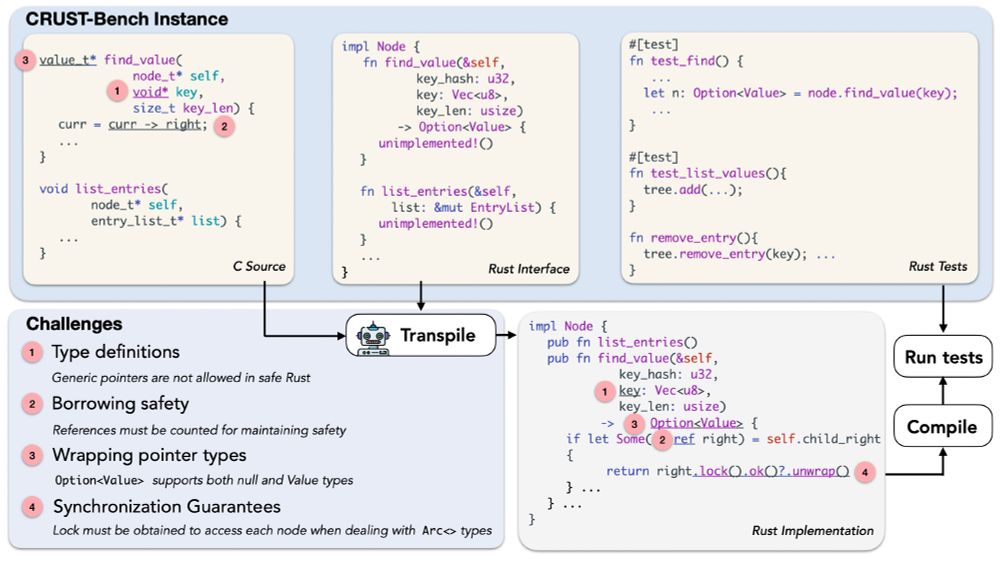

A dataset of 100 real-world C repositories across various domains, each paired with:

🦀 Handwritten safe Rust interfaces.

🧪 Rust test cases to validate correctness.

🧵[1/6]

Thank you, Kanishka and Aaron. I could not have hoped for better collaborators! arxiv.org/abs/2410.22590

👇 bsky.app/profile/juan...

We analyze design decisions for leveraging judgment distributions from LLM-as-a-judge: 🧵

(w/ Michael J.Q. Zhang, @eunsol.bsky.social)

First of all, information salience is a fuzzy concept. So how can we even measure it? (1/6)

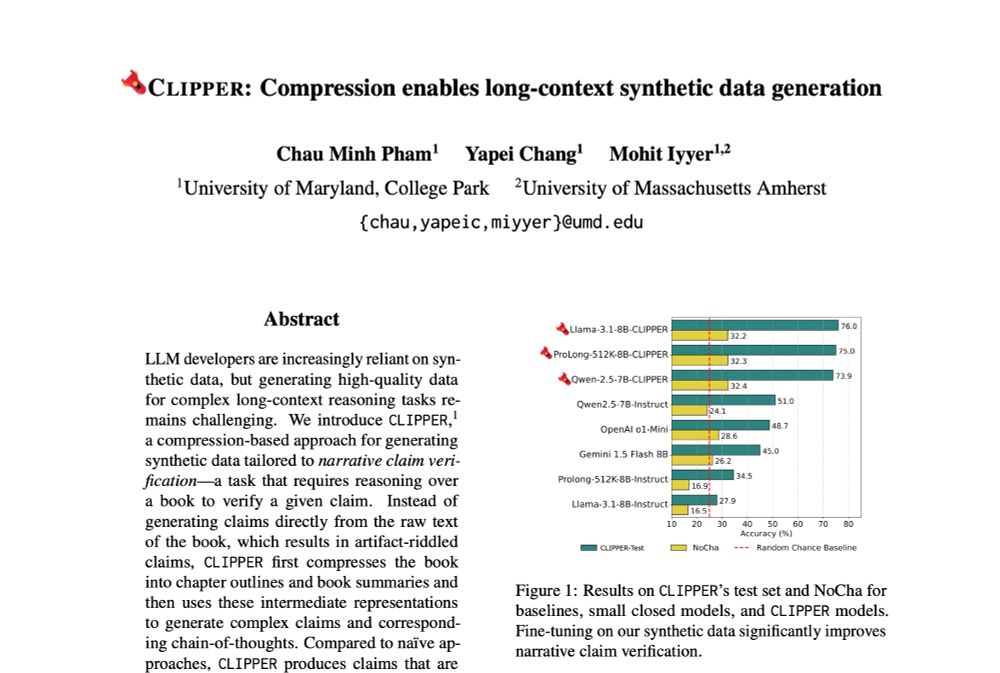

We present CLIPPER ✂️, a compression-based pipeline that produces grounded instructions for ~$0.5 each, 34x cheaper than human annotations.

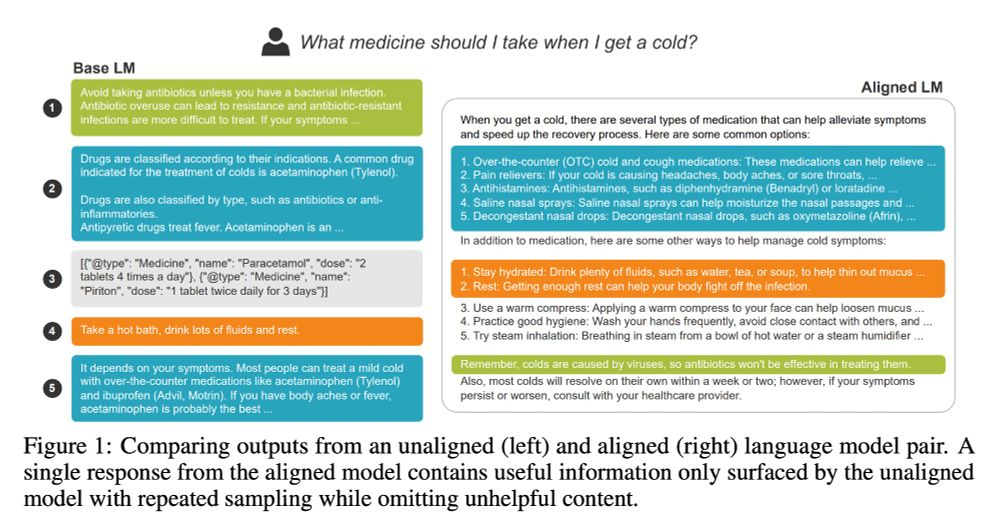

My work (arxiv.org/abs/2406.17692) w/ @gregdnlp.bsky.social & @eunsol.bsky.social exploring the connection between LLM alignment and response pluralism will be at pluralistic-alignment.github.io Saturday. Drop by to learn more!

Thread.

UT has a super vibrant comp ling & #nlp community!!

Apply here 👉 apply.interfolio.com/158280

@uwnlp.bsky.social & Ai2

With open models & 45M-paper datastores, it outperforms proprietary systems & matches human experts.

Try out our demo!

openscholar.allen.ai