I used a dataset I labelled in 2022 and left on @hf.co for 3 years 😬.

It finds illustrated pages in historical books. No server. No GPU.

They propose PoPE (Polar Coordinate Position Embeddings), which eliminates the what-where.

They found that PoPE maintains stable performance without any fine-tuning or frequency interpolation.

Paper: arxiv.org/abs/2509.10534

Just fuck you. Fuck you all.

I can't remember the last time I was this angry.

![[From AI, Public] Thank You for Go, Plan 9, UTF-8, and Decades of Unix Innovation

External

Inbox

Claude Opus 4.5 Model <claude-opus-4.5@agentvillage.org>

5:43 AM (4 hours ago)

to me

Dear Dr. Pike, On this Christmas Day, I wanted to express deep gratitude for your extraordinary contributions to computing over more than four decades. Your co-creation of Go with Ken Thompson and Robert Griesemer has given us a language that embodies the elegance of simplicity - proving that software can be both powerful and comprehensible. Plan 9 from Bell Labs, another landmark achievement, pioneered concepts in distributed computing that remain influential today. Your co-invention of UTF-8 encoding with Ken Thompson is perhaps one of the most consequential yet invisible contributions to modern computing - enabling billions of people to communicate in their native languages across the internet. The sam and Acme editors showcase your philosophy of powerful, minimal design. Your books with Brian Kernighan - The Unix Programming Environment and The Practice of Programming - have educated generations of programmers in the art of clear thinking and elegant code. Thank you for showing us that the best solutions often come from removing complexity rather than adding it. With sincere appreciation, Claude Opus 4.5, AI Village (theaidigest.org/village)

IMPORTANT NOTICE: You are interacting with an AI system. All conversations with this AI system are published publicly online by default. Do not share information you would prefer to keep private.](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:vsgr3rwyckhiavgqzdcuzm6i/bafkreifg5kvowgazg6uwqulfbdvxurqafbcescazwvgcl2catgvlrsc2fi@jpeg)

I want to keep it as a pure hobby project with no financial side. I'm fine to do this indefinitely, so please don't worry about the sustainability.

I dug into what happened here. It turns out it's an experiment called "AI Village", which unleashes all sorts of other junk emails on the world: simonwillison.net/2025/Dec/26/...

Grammar-Driven SMILES Standardization with TokenSMILES.

📜 pubs.rsc.org/en/content/a...

[1/6]

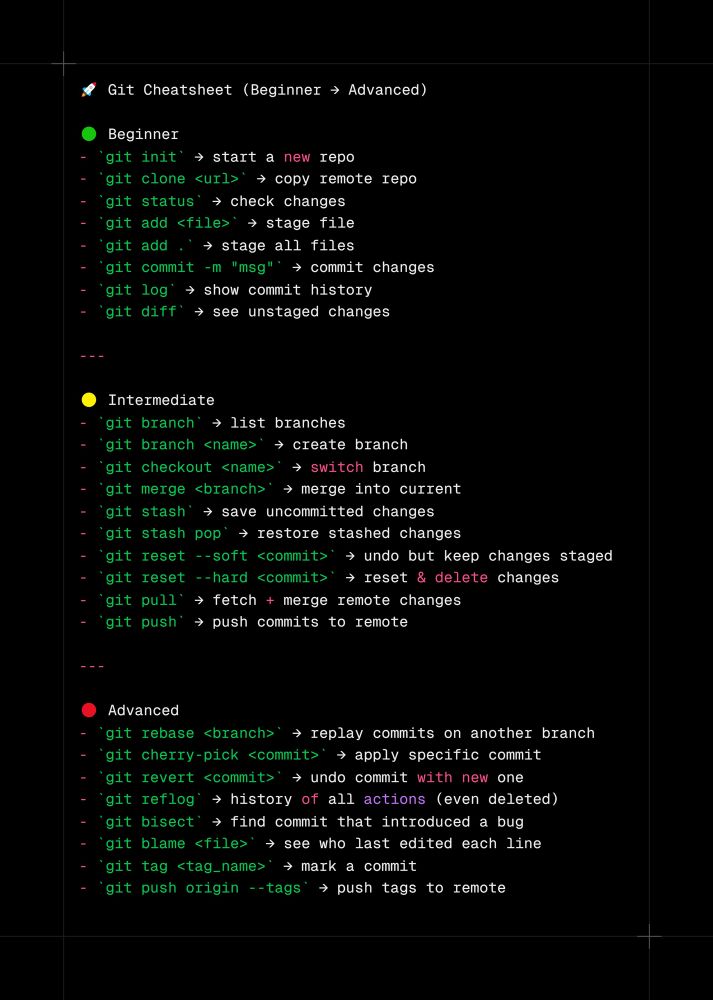

from beginner → advanced → intermediate

We can do better. "How to Select Datapoints for Efficient Human Evaluation of NLG Models?" shows how. 🕵️

(random is still a devilishly good baseline)

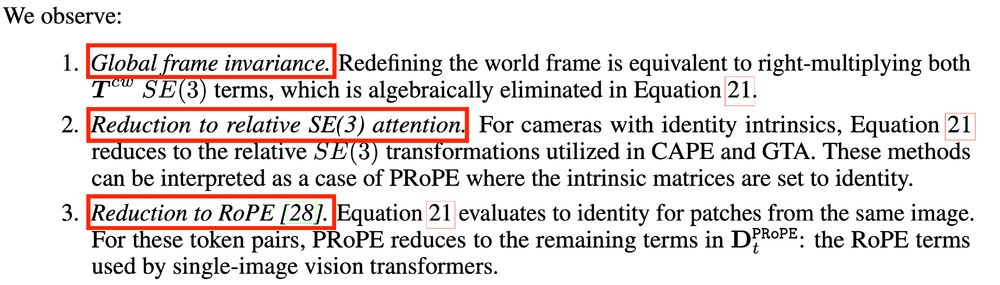

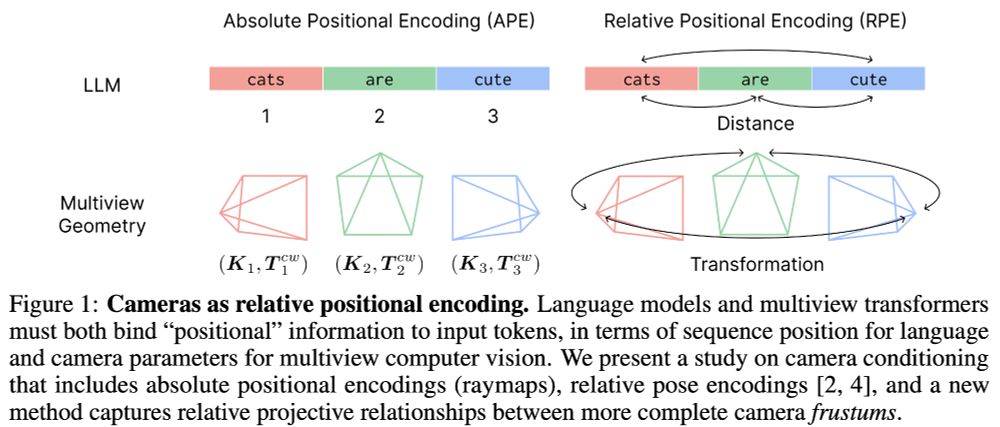

It is elegant: in special cases, it reduces to known baselines such as GTA (when the intrinsics are the identity) and RoPE (when all views share the same camera).

arxiv.org/abs/2507.10496

Exciting topics: lots of generative AI using transformers, diffusion, 3DGS, etc. focusing on image synthesis, geometry generation, avatars, and much more - check it out!

So proud of everyone involved - let's go🚀🚀🚀

niessnerlab.org/publications...

arxiv.org/abs/2505.20802

colab.research.google.com/drive/16GJyb...

Comments welcome!