they/she. 🧋 🍑 🧑🏾💻

https://psampson.net

(3) Give authors the ability to opt out of having an AI summary on their paper entirely. (Or require an opt in.)

Maybe I shouldn't be, but I'm legitimately surprised there's no opt-out option.

synthetic-data-workshop.github.io/papers/13.pdf

(canon ae-1 · kodak ultramax 400)

Check out Marissa's talk on our paper "auditing the audits" if you're at #FAccT2025!

⬇️

then it might be especially tempting to just replace them with ai. fewer tedious coffees, for one thing.

There’s a new, more contagious strain spreading—“razor blade throat” is one symptom. But, most spread is SYMPTOMLESS. You DON’T KNOW you’re spreading it.

More spread, more mutations & variants—>more disabled & dead.

Put a mask on in public. We keep us safe.

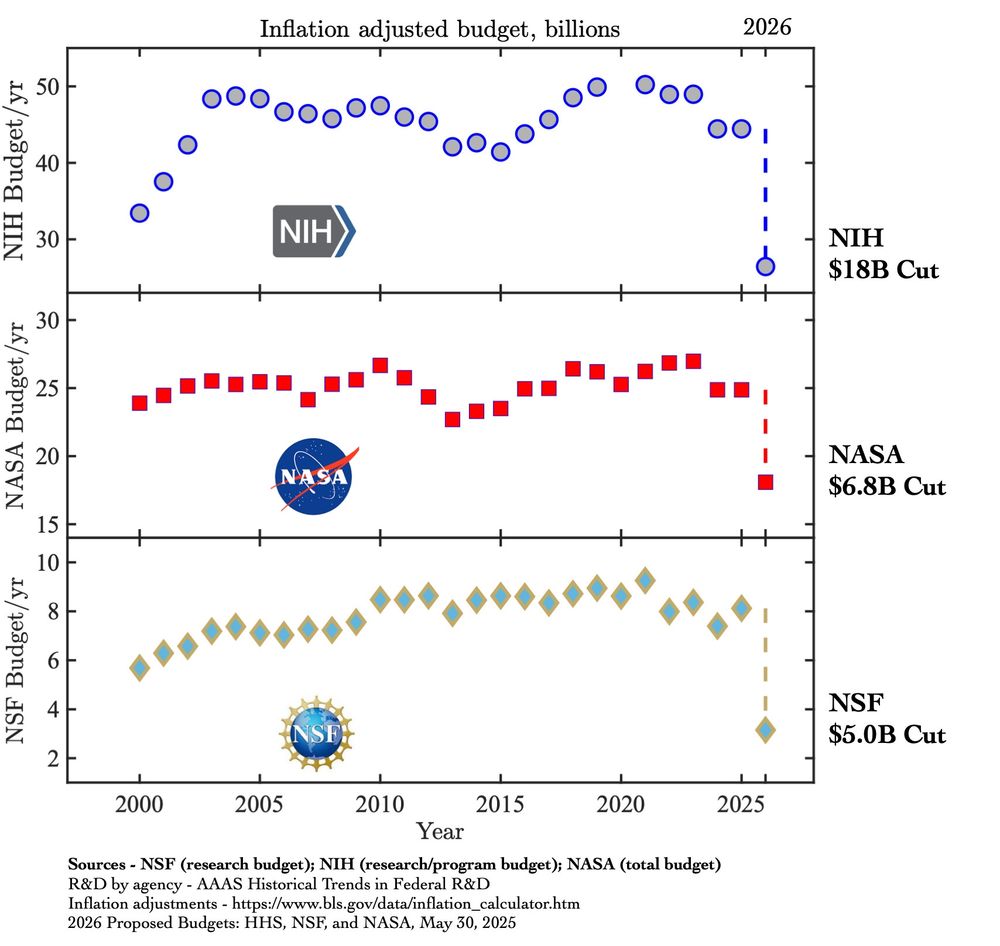

It's not just budgets but research, institutions, expertise, and training the next generation.
