https://www.maxkagan.com/

- candidates' ideology reflects strategic choices (endogeneity)

- perceptions of candidate ideology are related to candidate quality (endogeneity)

- favorable race dynamics attract higher-quality candidates (endogeneity)

🤔🤔🤔

We show that even well-identified DiD studies are often underpowered; sample sizes needed are surprisingly large

Paper: osf.io/preprints/os... 1/6

@jamesbrandecon.bsky.social and I have been chipping away at `dbreg`, a 📦 for running big regression models on database backends. For the right kinds of problems, the speed-ups are near magical.

Website: grantmcdermott.com/dbreg/

#rstats

[1/2]

1️⃣ replicate an existing experiment

2️⃣ run a novel experiment

on repdata.com

3️⃣ coauthor with Mary McGrath and me to meta-analyze the replications and existing studies

4️⃣ publish your study

details: alexandercoppock.com/replication_...

applications open Feb 1

please repost!

www.wsj.com/tech/ai/why-...

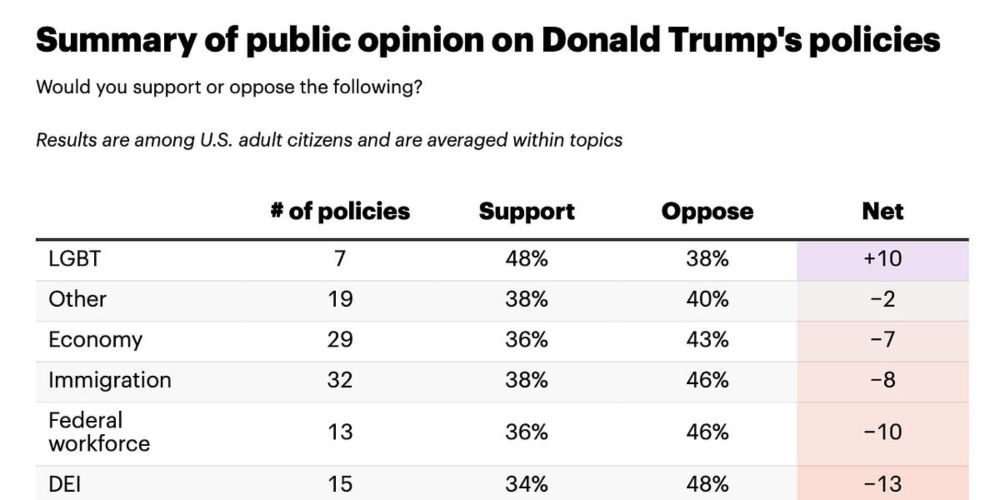

www.nytimes.com/interactive/...

vrollet.github.io/files/Ideolo...

www.andrewbenjaminhall.com/HallSun25.pdf

For every uncorrected p value you must add an extra letter to the claim.

“Eating chocolate maaaaaaaaay be associated with lower rates of stroke”

🗓️ Sat, July 26 | 11:00AM–1:30PM

📍 Bella Center, Auditorium 12

📝 Pre-register by ***July 1***: umdsurvey.umd.edu/jfe/form/SV_...

Measuring and Modeling Neighborhoods

By Cory McCartan (New York University), Jacob R. Brown (Boston University), and Kosuke Imai (Harvard University)

Granular geographic data present new opportunities to understand how neighborhoods are formed, and how they…

PDF: fabriziogilardi.org/resources/pa...

🧵
