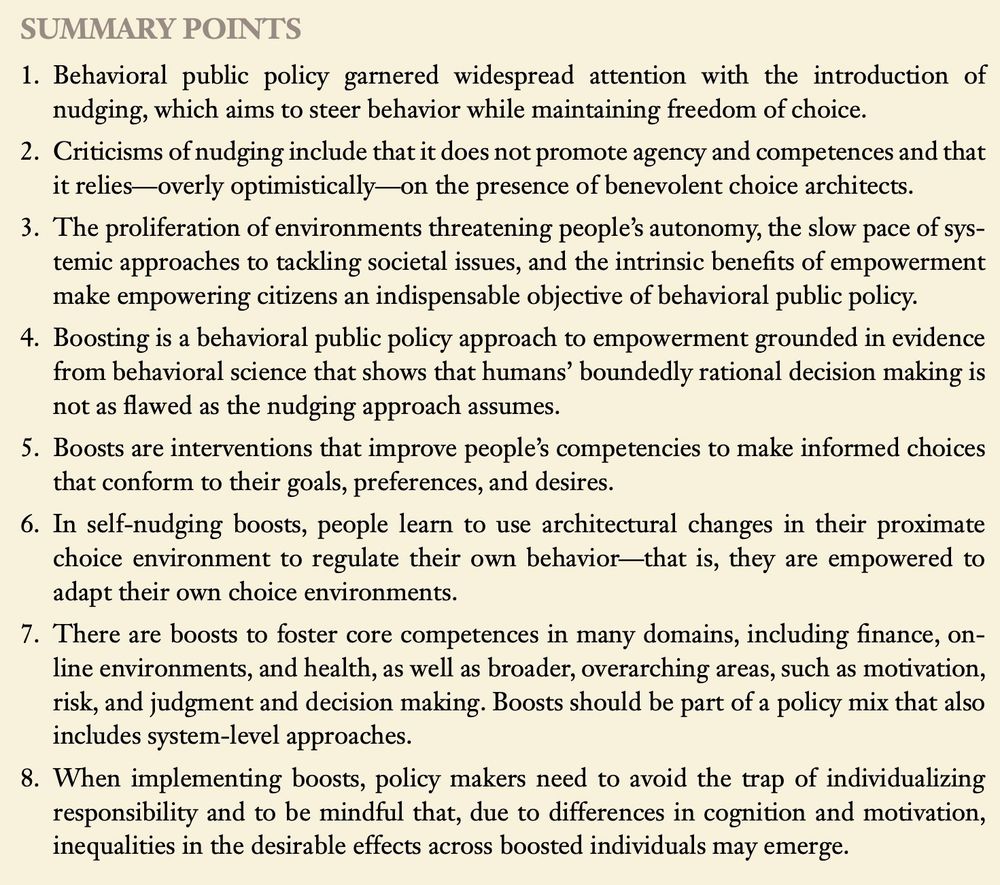

Senior Researcher @arc-mpib.bsky.social MaxPlanck @mpib-berlin.bsky.social, group leader #BOOSTING decisions: cognitive science, AI/collective intelligence, behavioral public policy, comput. social science, misinfo; stefanherzog.org scienceofboosting.org

Reposted by Stefan M. Herzog

Reposted by Robert Böhm, Brendan Nyhan, Scott L. Greer, Simon J. Greenhill, Mark J. Brandt, Elizabeth Stokoe, Rebecca Sear, Andrea Manca, Ian Hussey, Robert C. Richards, Paolo Crosetto, Rebecca Tushnet, Fabio Giglietto, Margot C. Finn, Stefan M. Herzog, Johannes Breuer, Greg Linden, Tom Willems

Reposted by Andreas Ortmann

Reposted by Taha Yasseri, Stefan M. Herzog

Reposted by Timnit Gebru, Margaret Mitchell, Euan G. Ritchie, Steve Peers, Elizabeth Stokoe, Ernesto Priego, Ana Delicado, Martin Tomko, Rebecca Sear, David S. Cohen, Jo Barraket, Silvia Secchi, Patrick S. Forscher, Stephen D. Murphy, Beatriz Gallardo Paúls, John Hogan, Christine Kooi, Ben Crum, Stefan M. Herzog, Aoife O’Donoghue, Jonathan Webber, Nikolay Marinov, Tama Leaver, Zen Faulkes, Madeleine Pownall, Erin O’Donnell, David Murakami Wood, Aurélien Mondon, Ann Bartow, Clayton Littlejohn, Henrik Skaug Sætra, Jack Stilgoe, James Connelly, Aleksandra Urman, Juan Ramón, Gary Marcus, Michael Larkin, Alysia Blackham, Hilary J. Allen, Annette Yoshiko Reed, Maksym Polyakov

Reposted by Stephan Lewandowsky, Stefan M. Herzog

Reposted by Leonhard Dobusch, Stefan M. Herzog

Reposted by Stefan M. Herzog, Rense Corten

Reposted by Fabio Giglietto, Stefan M. Herzog, Katrin Weller

Reposted by Stephan Lewandowsky, Victor Galaz, Stefan M. Herzog

Reposted by Cristina F. Fominaya, Nina Jankowicz, Nancy Kanwisher, Stephan Lewandowsky, David Lazer, Sander van der Linden, Winnifred R. Louis, Linda J. Skitka, David M. Amodio, Ana S. L. Rodrigues, Cornelia Betsch, Markus Appel, John Cook, Cameron Brick, Erika Franklin Fowler, Stefan M. Herzog, Timothy Graham, Manuel Puppis, Asheley R. Landrum
